Your monolith was a hero. It launched your MVP, got you to product-market fit, and handled everything you threw at it. But now it's sending you smoke signals. API response times are creeping up, a minor bug in one module takes down the entire system, and deploying a simple feature has become a week-long ritual of fear and coffee. The tightly coupled, synchronous world it was built for is now holding back your growth.

I've been there. You're at the exact point where so many engineering teams get stuck. The path forward seems complex, filled with jargon like Kafka, SQS, and RabbitMQ. You know you need a more resilient, scalable, and decoupled system, but the jump from theory to practice feels vast. What does an event-driven architecture actually look like for a real-world e-commerce platform, a fraud detection engine, or an IoT data pipeline? How do you handle failures, ensure data consistency, and observe a system that operates asynchronously?

This article cuts through the noise. We are not going to talk about abstract theory. Instead, we are going to walk through tangible, production-grade event-driven architecture examples you can learn from and adapt. For each example, we will look at the flow, explore the message formats, and discuss the tricky parts like idempotency, retries, and scaling. You will see how to move from a synchronous request-response model to a more robust, asynchronous flow that can handle modern workloads. This is your practical guide to evolving your architecture before those smoke signals turn into a full-blown fire.

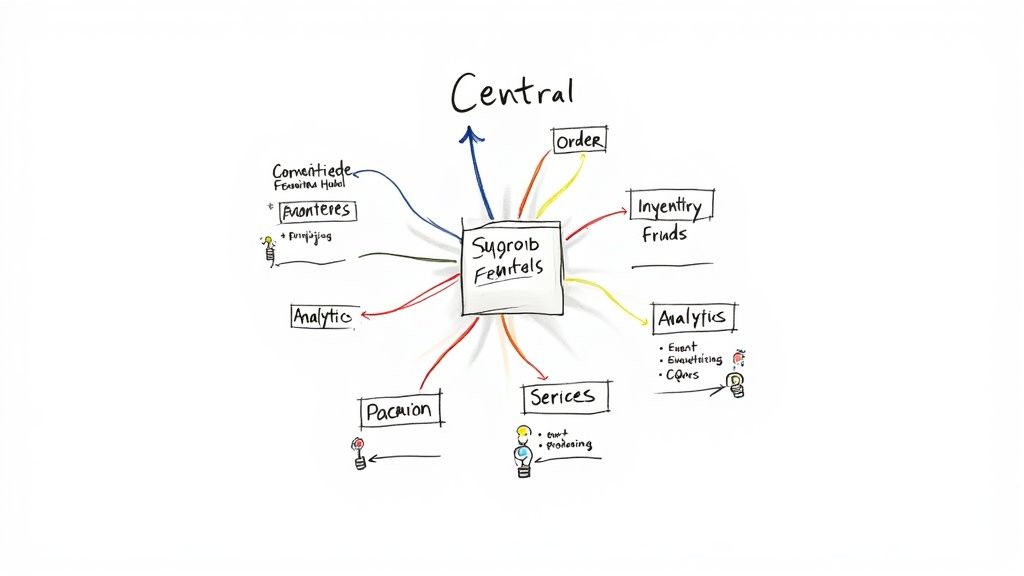

1. E-commerce Order Processing

Handling a customer's order on an e-commerce platform is a classic but powerful place to see event-driven architecture in action. When a customer clicks "Buy Now," it's not a single, monolithic action. It's the start of a complex workflow involving payment, inventory, shipping, and notifications. In a synchronous, tightly coupled system, a failure in any one of these downstream services could cause the entire order to fail. I once spent hours tracking down an issue where a failing SMS provider was preventing users from completing checkout. It was a painful lesson in coupling.

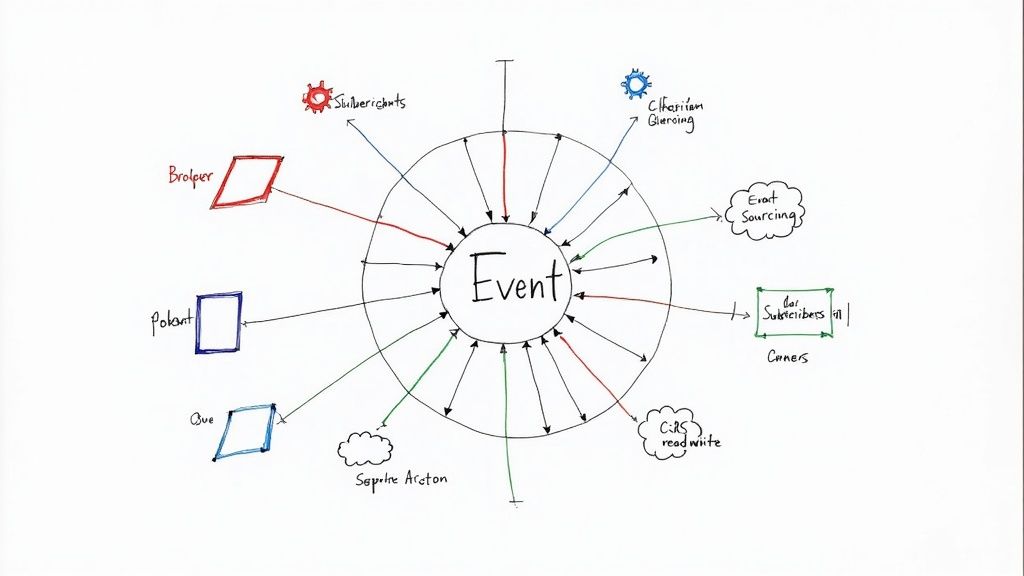

This is where event-driven design shines. An OrderPlaced event is published to a message broker like Kafka or RabbitMQ. From there, multiple independent microservices subscribe and react to it.

- Payment Service: Consumes the OrderPlaced event, processes the payment, and emits an OrderPaid event.

- Inventory Service: Listens for OrderPaid, decrements stock levels, and emits an InventoryUpdated event.

- Shipping Service: Listens for InventoryUpdated, schedules the shipment, and emits an OrderShipped event.

- Notification Service: Subscribes to OrderPaid and OrderShipped to send email or SMS alerts to the customer.
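To make the choreography concrete, here is a minimal Python sketch of the flow above. It assumes a toy in-memory bus that delivers events synchronously, which a real Kafka or RabbitMQ setup would not do. The event names come from the flow; the class and handler names are hypothetical.

```python
from collections import defaultdict
from typing import Callable

class EventBus:
    """Toy in-memory stand-in for a broker (assumption: synchronous delivery)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)
        self.log: list[tuple[str, dict]] = []  # every published event, in order

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self.log.append((event_type, payload))
        for handler in self._subscribers[event_type]:
            try:
                handler(payload)
            except Exception as exc:  # one failing consumer must not block the others
                print(f"handler for {event_type} failed: {exc}")

bus = EventBus()
notifications: list[str] = []

def payment_service(order: dict) -> None:
    # Charge the customer (omitted), then announce the fact.
    bus.publish("OrderPaid", {"order_id": order["order_id"], "amount": order["amount"]})

def inventory_service(paid: dict) -> None:
    bus.publish("InventoryUpdated", {"order_id": paid["order_id"]})

def shipping_service(updated: dict) -> None:
    bus.publish("OrderShipped", {"order_id": updated["order_id"]})

def notification_service(event: dict) -> None:
    notifications.append(f"update for order {event['order_id']}")

bus.subscribe("OrderPlaced", payment_service)
bus.subscribe("OrderPaid", inventory_service)
bus.subscribe("InventoryUpdated", shipping_service)
bus.subscribe("OrderPaid", notification_service)
bus.subscribe("OrderShipped", notification_service)

bus.publish("OrderPlaced", {"order_id": "o-1", "amount": 49.99})
```

Each service knows only the event it reacts to and the event it emits, never the other services. Adding a new consumer, such as a loyalty-points service, is one more `subscribe` call.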

This decoupling is a strategic advantage. If the notification service is temporarily down, the order still gets processed and shipped. Each service can be scaled, updated, and deployed independently, a core tenet of modern microservices best practices. For a deeper look into the mechanics of order fulfillment in an e-commerce context, explore this ultimate guide to e-commerce order processing.

Strategic Takeaway: Use an event-driven approach to decouple your core business transaction (the order) from its side effects (fulfillment). This builds resilience and allows each part of your system, from payment to shipping, to evolve and scale on its own terms without bringing down the entire operation. Ensure handlers are idempotent to prevent duplicate processing if an event is delivered more than once.
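Idempotency is easiest to see with a duplicate delivery. The sketch below assumes each event carries a unique `event_id`, which is a hypothetical field, and that the consumer records the IDs it has already applied. In production, that record would live in a durable store updated in the same transaction as the stock change.

```python
from dataclasses import dataclass, field

@dataclass
class InventoryService:
    stock: dict[str, int]
    # Hypothetical dedup store; in production, a durable table written
    # atomically with the stock update.
    processed_event_ids: set[str] = field(default_factory=set)

    def handle_order_paid(self, event: dict) -> bool:
        """Apply an OrderPaid event at most once; return False for duplicates."""
        event_id = event["event_id"]
        if event_id in self.processed_event_ids:
            return False  # redelivery from an at-least-once broker: ignore it
        for sku, qty in event["items"].items():
            self.stock[sku] -= qty
        self.processed_event_ids.add(event_id)
        return True

svc = InventoryService(stock={"sku-42": 10})
evt = {"event_id": "evt-001", "items": {"sku-42": 2}}
first = svc.handle_order_paid(evt)
second = svc.handle_order_paid(evt)  # the same event delivered twice
```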

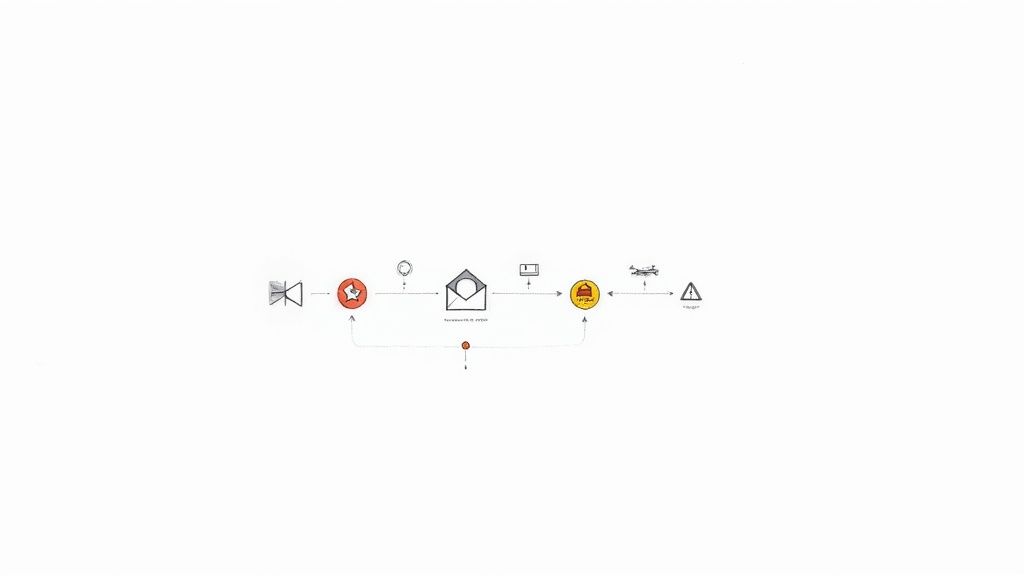

2. Real-time Fraud Detection

In the financial world, milliseconds matter. Detecting fraud as it happens, not hours later, is a non-negotiable requirement. This is where event-driven architecture becomes a mission-critical tool. Every time a user swipes a card, makes an online payment, or transfers funds, they trigger a cascade of events. A traditional, monolithic system would struggle to analyze this firehose of data in real time, creating unacceptable delays and exposing both customers and institutions to risk.

Event-driven systems flip this model. A TransactionAttempted event is instantly published to a high-throughput message bus like Apache Kafka. This single event becomes the trigger for a parallel, asynchronous fraud analysis pipeline. Multiple specialized microservices consume this event simultaneously.

- Rule Engine Service: Consumes TransactionAttempted, checks the data against a set of predefined fraud rules (e.g., transaction amount, location, frequency), and emits a RuleEngineScoreCalculated event with a risk score.

- Behavioral Analysis Service: Listens for the same TransactionAttempted event, compares the user's current behavior to their historical patterns, and publishes a BehavioralRiskAssessed event.

- ML Model Service: Feeds the transaction data into one or more machine learning models to predict the probability of fraud, then emits an MLPredictionGenerated event.

- Decision Service: Subscribes to the outputs of all three services, aggregates the risk scores, and makes a final decision, publishing a TransactionApproved or TransactionDeclined event.
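The Decision Service is the interesting part of this fan-out because it has to join three independent streams back together. Below is a minimal sketch. It assumes the three analyzer events are correlated by a transaction ID and combined with a plain average against a hypothetical 0.7 threshold. A real system would likely weight the scores and add a timeout for analyzers that never answer.

```python
from dataclasses import dataclass, field
from typing import Optional

# The three analyzer outputs the Decision Service waits for (names from the flow above).
EXPECTED_SCORES = {"RuleEngineScoreCalculated", "BehavioralRiskAssessed", "MLPredictionGenerated"}

@dataclass
class DecisionService:
    decline_threshold: float = 0.7  # hypothetical cut-off on the combined score
    pending: dict[str, dict[str, float]] = field(default_factory=dict)

    def on_score(self, event_type: str, transaction_id: str, score: float) -> Optional[str]:
        """Record one analyzer score; return a decision event type once all three arrived."""
        scores = self.pending.setdefault(transaction_id, {})
        scores[event_type] = score
        if set(scores) != EXPECTED_SCORES:
            return None  # still waiting on at least one analyzer
        del self.pending[transaction_id]  # free the per-transaction state
        combined = sum(scores.values()) / len(scores)  # naive average (assumption)
        return "TransactionDeclined" if combined >= self.decline_threshold else "TransactionApproved"

decider = DecisionService()
decider.on_score("RuleEngineScoreCalculated", "tx-1", 0.9)
decider.on_score("BehavioralRiskAssessed", "tx-1", 0.8)
decision = decider.on_score("MLPredictionGenerated", "tx-1", 0.6)
```

Because the decision is keyed by transaction ID, the three scores can arrive in any order, which is exactly what independent consumers produce.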

This decoupled, stream-processing approach scales well and lets detection grow more sophisticated over time. New fraud detection models can be added as new subscribers without altering the core transaction flow. Within this framework, modern systems leverage AI technology for catching chargeback fraud and other complex patterns that rule-based systems might miss.

Strategic Takeaway: Use event streams to transform security from a blocking, synchronous check into a parallel, real-time data analysis pipeline. This lets you layer multiple complex detection methods (rules, ML, behavioral analytics) without stacking their latencies on the customer's transaction. Because the analyzers run concurrently, the added delay is bounded by the slowest one rather than the sum of all of them. The key is to design a "fan-out" pattern where a single transaction event triggers multiple independent analytical services concurrently.

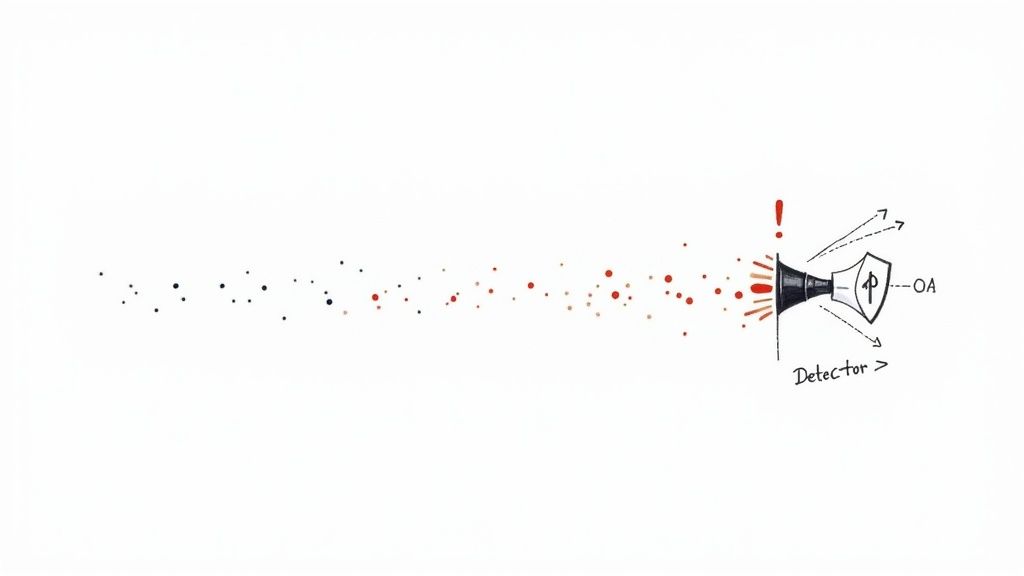

3. Real-time Analytics and Data Pipelines

In today's data-hungry landscape, businesses can no longer wait for nightly batch jobs to understand what's happening. Real-time analytics pipelines, powered by event-driven architecture, have become essential for ingesting, processing, and analyzing massive data streams from IoT devices, user clicks, and application logs. Instead of a slow, monolithic ETL (Extract, Transform, Load) process, events flow continuously through a decentralized system. This enables live business intelligence, fraud detection, and immediate operational insights.

This is where event-driven architecture provides a seismic shift in capability. An event, such as UserClickedAd or SensorReadingReceived, is published to a high-throughput event stream like Apache Kafka or Amazon Kinesis. This single event can then trigger a cascade of independent, parallel processing activities across a data pipeline.

- Ingestion & Validation Service: Consumes the raw event, validates its schema (often using a schema registry), and enriches it with metadata before publishing a clean ValidatedSensorReading event.

- Real-time Aggregation Service: Listens for validated events, performs in-memory aggregations (e.g., calculating average temperature per minute), and pushes results to a live dashboard. This is often handled by stream-processing frameworks like Apache Spark or Flink.

- Data Lake/Warehouse Service: Subscribes to the same validated events and archives the raw data into long-term storage, such as a data lake, for historical analysis and model training.

- Alerting Service: Monitors the stream for specific patterns or thresholds, like an overheating sensor, and fires an AnomalyDetected event to trigger immediate notifications.
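Here is a minimal sketch of the validation and per-minute aggregation stages. It assumes readings arrive as dicts with hypothetical `sensor_id`, `ts` (epoch seconds), and `temp_c` fields. The sketch processes a list in one pass for clarity. Frameworks like Flink or Spark run the same tumbling windows continuously and also handle late data and state recovery, which this sketch ignores.

```python
from collections import defaultdict

def is_valid(reading: dict) -> bool:
    """Minimal entry-point check, standing in for a schema-registry validation."""
    return (
        isinstance(reading.get("sensor_id"), str)
        and isinstance(reading.get("ts"), (int, float))
        and isinstance(reading.get("temp_c"), (int, float))
    )

def per_minute_averages(readings: list[dict]) -> dict[tuple[str, int], float]:
    """Average temp_c per sensor over tumbling one-minute windows."""
    sums: dict[tuple[str, int], float] = defaultdict(float)
    counts: dict[tuple[str, int], int] = defaultdict(int)
    for reading in readings:
        if not is_valid(reading):
            continue  # a real pipeline would route this to a dead-letter topic
        window_start = int(reading["ts"]) // 60 * 60  # align to the minute boundary
        key = (reading["sensor_id"], window_start)
        sums[key] += reading["temp_c"]
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}

averages = per_minute_averages([
    {"sensor_id": "s1", "ts": 0, "temp_c": 20.0},
    {"sensor_id": "s1", "ts": 30, "temp_c": 22.0},
    {"sensor_id": "s1", "ts": 61, "temp_c": 30.0},
    {"sensor_id": "s1", "ts": 10, "temp_c": "hot"},  # fails validation, skipped
])
```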

This decoupling allows data engineering teams to evolve each stage of the pipeline independently. If the historical archiving service goes down, real-time dashboards and critical alerts are completely unaffected. This model is one of the most powerful event-driven architecture examples, forming the backbone of data platforms at companies like Netflix and LinkedIn for everything from recommendation engines to operational monitoring.

Strategic Takeaway: Use event streams to separate the high-speed "hot path" (real-time dashboards, alerts) from the high-volume "cold path" (batch analytics, data warehousing). This ensures that critical, time-sensitive insights are never delayed by slower, bulk data processing. Implement robust data quality checks and schema validation at the pipeline's entry point to prevent "garbage in, garbage out" scenarios downstream.

4. IoT Device Management and Monitoring

Managing millions of connected devices in an Internet of Things (IoT) ecosystem is a perfect scenario for event-driven architecture. Imagine a smart factory floor or a citywide network of environmental sensors. Each device constantly emits data: temperature readings, motion detection, status updates. Trying to poll each device individually would be a catastrophic failure of scale. A synchronous request-response model simply cannot handle the sheer volume and velocity of these data streams.

This is where an event-based approach becomes essential. Each piece of sensor data is treated as an event, published to an event bus like Apache Kafka or AWS IoT Core using lightweight protocols like MQTT. Multiple downstream services can then subscribe to these event streams to perform specialized tasks in parallel.

- Data Ingestion Service: Consumes the raw SensorDataReceived event, validates it, and forwards it for processing.

- Real-time Analytics Service: Listens for validated data events to detect anomalies, like a sudden temperature spike, and emits a HighTemperatureAlert event.

- Dashboard Service: Subscribes to aggregated data streams to update live monitoring dashboards for human operators.

- Actuator Control Service: Reacts to alert events, such as HighTemperatureAlert, by sending a command event like TriggerCoolingSystem back to a device on the factory floor.
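The alert-to-actuator loop can be sketched as two small functions. The 80 °C threshold, the `device_id` field, and the function names are assumptions for illustration. In a deployment, each function would be a separate consumer that reads from and writes to the event bus.

```python
from typing import Optional

TEMP_LIMIT_C = 80.0  # hypothetical alert threshold

def analytics_service(event: dict) -> Optional[dict]:
    """Turn a validated SensorDataReceived event into a HighTemperatureAlert when too hot."""
    if event["temp_c"] > TEMP_LIMIT_C:
        return {"type": "HighTemperatureAlert", "device_id": event["device_id"], "temp_c": event["temp_c"]}
    return None

def actuator_control_service(alert: dict) -> dict:
    """React to an alert by emitting a command event addressed to the same device."""
    return {"type": "TriggerCoolingSystem", "device_id": alert["device_id"]}

commands: list[dict] = []
for reading in [
    {"type": "SensorDataReceived", "device_id": "press-7", "temp_c": 72.5},
    {"type": "SensorDataReceived", "device_id": "press-7", "temp_c": 91.0},
]:
    alert = analytics_service(reading)
    if alert is not None:
        commands.append(actuator_control_service(alert))
```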

This decoupled architecture ensures that a failure in one component, like the dashboard service, doesn't interrupt critical functions like real-time alerting and automated responses. Each microservice can be scaled independently to handle varying loads, a crucial requirement for building a resilient, high-availability architecture that actually works. This model is a cornerstone of managed platforms like AWS IoT Core and Azure IoT Hub, showcasing one of the most powerful event-driven architecture examples in modern technology.

Strategic Takeaway: Treat each device signal as an immutable event. This decouples data producers (sensors) from data consumers (analytics, alerts). It allows your system to process massive, concurrent data streams reliably and trigger automated, near-real-time responses without creating bottlenecks. Prioritize edge filtering to reduce noise and network traffic before data even hits your central event bus.
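One common form of edge filtering is a deadband filter: the device forwards a reading only when the value has moved meaningfully since the last reading it sent. This is one technique among several (sampling and local aggregation are others), and the 0.5 threshold below is an arbitrary assumption.

```python
from typing import Iterable, Iterator, Optional

def deadband_filter(readings: Iterable[float], delta: float) -> Iterator[float]:
    """Yield a reading only if it differs from the last forwarded value by more than delta."""
    last_sent: Optional[float] = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > delta:
            last_sent = value
            yield value

forwarded = list(deadband_filter([20.0, 20.1, 20.2, 21.5, 21.6, 19.0], delta=0.5))
```

Here six readings shrink to three, and the central bus still sees every meaningful change.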

5. User Activity Tracking and Personalization

Capturing user interactions in real time is the foundation of modern digital experiences, from Netflix's content suggestions to Amazon's "customers also bought" feature. A monolithic approach would require the core application to be aware of every potential downstream system, from recommendation engines to analytics platforms. This creates a brittle system where a slowdown in an analytics service could impact the user's ability to browse.

This is a prime use case for event-driven architecture. Every user action, whether a click, a view, or a scroll, becomes a discrete event like ProductViewed or VideoPlayed. These events are fired off into a message broker like Kafka, allowing various backend systems to consume them asynchronously without affecting the user-facing application's performance.

- Analytics Service: Consumes all user interaction events to build dashboards and track key performance indicators.

- Recommendation Engine: Listens for events like ProductAddedToCart or ArticleRead to update its machine learning models and generate personalized suggestions in real time.

- Marketing Automation Platform: Subscribes to events like UserSignedUp or SubscriptionCancelled to trigger targeted email campaigns or push notifications.

- Data Lake / Warehouse: An event consumer archives all raw events into long-term storage for historical analysis, A/B testing insights, and model retraining.
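The list above is essentially a subscription table. The sketch below assumes a hypothetical `SUBSCRIPTIONS` map where `"*"` means "every event." With Kafka, each consumer would typically be its own consumer group, so each one receives the full stream independently and tracks its own position.

```python
from collections import defaultdict

# Hypothetical subscription map: consumer name -> event types it wants ("*" = everything).
SUBSCRIPTIONS = {
    "analytics": {"*"},
    "recommendations": {"ProductAddedToCart", "ArticleRead"},
    "marketing": {"UserSignedUp", "SubscriptionCancelled"},
    "data_lake": {"*"},
}

def route(events: list[dict]) -> dict[str, list[str]]:
    """Return, per consumer, the event types it would receive."""
    delivered: dict[str, list[str]] = defaultdict(list)
    for event in events:
        for consumer, wanted in SUBSCRIPTIONS.items():
            if "*" in wanted or event["type"] in wanted:
                delivered[consumer].append(event["type"])
    return dict(delivered)

deliveries = route([
    {"type": "ProductViewed", "user_id": "u1"},
    {"type": "ProductAddedToCart", "user_id": "u1"},
    {"type": "UserSignedUp", "user_id": "u2"},
])
```

Adding a new consumer, like the click-pattern fraud detector mentioned below, is a new entry in the table rather than a change to the application that emits the events.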

This decoupled architecture ensures the user experience remains fast and responsive, regardless of the processing load on backend analytics or machine learning systems. It allows new services, like a fraud detection system that analyzes click patterns, to be introduced simply by subscribing to the existing event streams. This creates an incredibly flexible and scalable platform for understanding and reacting to user behavior.

Strategic Takeaway: Treat user behavior as a stream of events, not as database records to be queried. This decouples the core user experience from the complex and evolving systems that leverage that data. Always be transparent about data collection, comply with privacy regulations like GDPR, and implement clear consent management and data retention policies.

6. Real-time Notification and Alerting Systems

In a connected world, users expect immediate feedback. Whether it's a banking transaction alert, a CI/CD pipeline failure notification on Slack, or a simple order status update, real-time alerts are a non-negotiable part of the modern user experience. Trying to manage this synchronously is a recipe for disaster; a single slow or failed SMS gateway could halt a critical business process.

This is a prime scenario for event-driven architecture. When a significant business event occurs, like UserLoginFailed or PriceDropDetected, it is published to an event bus. This single event can then trigger a whole ecosystem of notification services, each operating independently and in parallel.

- Email Service: Consumes the event and queues an email via a provider like SendGrid.

- SMS Service: Listens for the same event and sends a text message through Twilio.

- Push Notification Service: Triggers a mobile push alert using Firebase Cloud Messaging or Amazon SNS.

- Collaboration Service: Posts a message to a specific Slack or Microsoft Teams channel for internal alerting.

This decoupling ensures that the core application logic is not burdened by the complexities of multi-channel delivery, retries, and provider-specific APIs. If the push notification service is down, the user still receives an email and SMS. Each service can be scaled based on its specific load, a crucial aspect of building robust systems. For those looking to build such responsive systems in Python, exploring a tutorial on mastering asynchronous tasks with Celery, RabbitMQ, and Redis can provide a powerful foundation.
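Here is a single-process sketch of that fan-out. It uses hypothetical sender functions in place of real SendGrid, Twilio, or Firebase clients. The push sender simulates an outage to show that its failure is recorded rather than blocking email and SMS. In production, each channel would usually be its own consumer, so the isolation comes from separate processes rather than from a try/except.

```python
from typing import Callable

def send_email(event: dict) -> str:
    return f"email to {event['user_id']}"  # stand-in for a real email provider call

def send_sms(event: dict) -> str:
    return f"sms to {event['user_id']}"  # stand-in for a real SMS provider call

def send_push(event: dict) -> str:
    raise ConnectionError("push provider unavailable")  # simulated outage

CHANNELS: dict[str, Callable[[dict], str]] = {"email": send_email, "sms": send_sms, "push": send_push}

def fan_out(event: dict) -> dict[str, str]:
    """Deliver one event to every channel; a failing channel is recorded, not fatal."""
    results: dict[str, str] = {}
    for name, sender in CHANNELS.items():
        try:
            results[name] = sender(event)
        except Exception as exc:
            results[name] = f"failed: {exc}"  # a real system would retry or dead-letter this
    return results

outcome = fan_out({"type": "PriceDropDetected", "user_id": "u-9"})
```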

Strategic Takeaway: Decouple event triggers from notification delivery mechanisms. This allows you to add, remove, or change notification channels (e.g., adding WhatsApp notifications) without touching the core business logic. Implement user preference services that consume these events and decide which channel a specific user should be notified on, and respect rate limits to avoid overwhelming users with alerts.
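Preference lookup and rate limiting can live in one small component. This sketch makes three hypothetical choices: an in-memory preferences map, a sliding window of recent sends per user, and an "email" fallback channel. The caller passes the current time explicitly, which keeps the logic easy to test.

```python
from collections import defaultdict, deque

class NotificationRouter:
    """Choose channels from user preferences and cap alerts per user in a sliding window."""

    def __init__(self, preferences: dict[str, list[str]], max_per_window: int, window_s: float) -> None:
        self.preferences = preferences
        self.max_per_window = max_per_window
        self.window_s = window_s
        self._sent: dict[str, deque] = defaultdict(deque)  # send timestamps per user

    def channels_for(self, user_id: str, now: float) -> list[str]:
        history = self._sent[user_id]
        while history and now - history[0] >= self.window_s:
            history.popleft()  # drop sends that fell out of the window
        if len(history) >= self.max_per_window:
            return []  # over the limit: suppress this alert
        history.append(now)
        return self.preferences.get(user_id, ["email"])  # hypothetical default channel

router = NotificationRouter({"u1": ["push", "sms"]}, max_per_window=2, window_s=60)
at_0 = router.channels_for("u1", now=0)
at_10 = router.channels_for("u1", now=10)
at_20 = router.channels_for("u1", now=20)   # third alert inside 60 s: suppressed
at_65 = router.channels_for("u1", now=65)   # the send at t=0 has expired
new_user = router.channels_for("u2", now=0)
```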

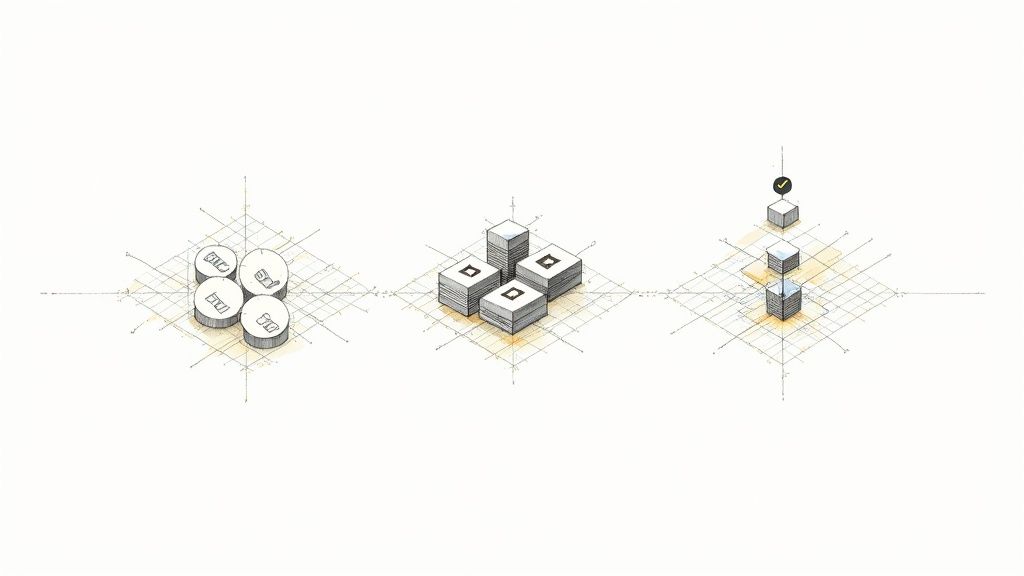

7. Workflow Orchestration and Automation

Complex business processes, like a multi-stage insurance claim or a new customer onboarding flow, are often long-running and involve numerous steps. A failure at any point can leave the entire process in an inconsistent state. Managing this with tightly coupled services is a recipe for disaster; a single service outage could halt every in-flight workflow, creating a massive operational backlog and a terrible customer experience.

This is where orchestrating workflows with event-driven architecture becomes a game changer. Instead of services directly calling each other, a central orchestrator or a choreographed set of services reacts to events that represent state transitions. An event like ClaimFiled doesn't just trigger one action; it initiates a durable, stateful workflow that can manage complex logic, including timers, human approvals, and conditional branches.

- Insurance Claim Service: A ClaimFiled event triggers the start of a workflow. It might first call a Validation Service.

- Validation Service: After validation, it emits a ClaimValidated event. The workflow engine consumes this and proceeds.

- Approval Service: The workflow now waits for a ClaimApproved or ClaimRejected event, which could be triggered by a human claims adjuster interacting with a UI.

- Payment Service: Upon receiving ClaimApproved, the workflow triggers this service to issue a payment and emit a ClaimPaid event, concluding the process.
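Under the hood, an orchestrated workflow is a state machine driven by events. This sketch models the claim flow with a hypothetical transition table and an audit history. It is in-memory only. Engines like Temporal or AWS Step Functions persist this state durably and add timers and retries on top.

```python
# Allowed transitions: (current_state, incoming_event) -> next_state. Hypothetical state names.
TRANSITIONS = {
    ("Filed", "ClaimValidated"): "Validated",
    ("Validated", "ClaimApproved"): "Approved",
    ("Validated", "ClaimRejected"): "Rejected",
    ("Approved", "ClaimPaid"): "Paid",
}

class ClaimWorkflow:
    def __init__(self, claim_id: str) -> None:
        self.claim_id = claim_id
        self.state = "Filed"           # a ClaimFiled event starts the workflow
        self.history = ["ClaimFiled"]  # audit trail of every event applied

    def apply(self, event_type: str) -> str:
        """Advance the workflow, rejecting events that are invalid in the current state."""
        key = (self.state, event_type)
        if key not in TRANSITIONS:
            raise ValueError(f"{event_type} is not valid in state {self.state}")
        self.state = TRANSITIONS[key]
        self.history.append(event_type)
        return self.state

wf = ClaimWorkflow("claim-123")
for evt in ["ClaimValidated", "ClaimApproved", "ClaimPaid"]:
    wf.apply(evt)
```

Rejecting out-of-order events, such as a ClaimPaid that arrives before approval, is what keeps a long-running process from drifting into an inconsistent state.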

This model, popularized by tools like AWS Step Functions and Temporal, provides immense visibility and resilience. You can see exactly where each workflow is, handle failures with built-in retry logic, and implement compensation actions for failed steps. It's one of the most powerful event-driven architecture examples for managing business logic that spans multiple services and timeframes. You can explore a deeper analysis of related patterns and see how they contribute to building scalable apps that don't break.

Strategic Takeaway: For long-running, multi-step business processes, use event-driven orchestration to manage state and logic. This decouples the process flow from the individual microservices executing the tasks. Implement robust observability from day one to track workflow state, and use dead-letter queues to handle workflows that get permanently stuck, ensuring no process is ever truly lost.
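Retries and dead-letter queues usually work together: retry transient failures a bounded number of times, then park the message for a human to inspect. This sketch uses a plain list as a stand-in for a real DLQ, such as an SQS queue or a Kafka topic. It also omits backoff between attempts, which a real consumer should add.

```python
from typing import Callable

def process_with_retries(
    message: dict,
    handler: Callable[[dict], None],
    dead_letter_queue: list[dict],
    max_attempts: int = 3,
) -> bool:
    """Run handler up to max_attempts times; on final failure, park the message in the DLQ."""
    last_error = ""
    for _ in range(max_attempts):
        try:
            handler(message)
            return True
        except Exception as exc:
            last_error = repr(exc)  # a real consumer would also back off before retrying
    dead_letter_queue.append({"message": message, "attempts": max_attempts, "error": last_error})
    return False

dlq: list[dict] = []
calls = {"count": 0}

def flaky_handler(message: dict) -> None:
    calls["count"] += 1
    if calls["count"] < 2:
        raise TimeoutError("downstream timeout")  # fails once, then succeeds

def broken_handler(message: dict) -> None:
    raise ValueError("malformed claim payload")  # fails every time

ok = process_with_retries({"claim_id": "c-1"}, flaky_handler, dlq)
parked = process_with_retries({"claim_id": "c-2"}, broken_handler, dlq)
```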

8. Log Aggregation and Centralized Monitoring

In a distributed system with dozens or even hundreds of microservices, trying to troubleshoot an issue by checking individual log files on separate servers is a nightmare. It's like trying to find a specific needle in a continent full of haystacks. Centralized logging isn't just a convenience; it's a foundational practice for maintaining observability and sanity in a complex environment. A monolithic application might write to a single file, but microservices demand a different approach.

This is where event-driven architecture provides an elegant solution. Every log entry, from a simple informational message to a critical error, is treated as an event. Applications and infrastructure components are configured to emit these log events to a centralized data pipeline or message broker. This stream of events is then consumed by a dedicated logging platform for aggregation, indexing, and analysis.

- Log Emitters: Your applications, servers, and containers are configured with agents (like Filebeat or Fluentd) that tail log files or capture standard output, format the entries into structured events (often JSON), and forward them.

- Event Ingestion Layer: A high-throughput system like Kafka or a dedicated log shipper like Logstash receives this massive volume of log events. It can perform initial filtering, enrichment (e.g., adding geographic data based on an IP address), and routing.

- Indexing and Storage: A powerful search engine like Elasticsearch consumes the processed log events, indexes them for fast querying, and stores them.

- Analysis and Visualization: Tools like Kibana or Grafana provide a user interface to search, aggregate, and create dashboards from the indexed logs, allowing engineers to spot trends and diagnose issues in real time.

This decoupled architecture, best known through the ELK Stack (Elasticsearch, Logstash, Kibana), is a prime example of event-driven design in operations. Platforms like Datadog, Splunk, and New Relic have built sophisticated businesses on the same model. The key is that the services producing the logs don't need to know or care where the logs end up; they just emit the events.

Strategic Takeaway: Treat logs as events, not as static files. By creating a centralized, event-driven pipeline for your logs, you decouple observability from your application logic. This allows you to build powerful, real-time monitoring and alerting systems that can scale independently of your core services. Always use structured logging (e.g., JSON) so your events have a consistent schema, making them dramatically easier to query and analyze.
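Structured logging needs no extra tooling in Python: a custom `logging.Formatter` can emit one JSON object per line. The output field names and the `order_id`/`trace_id` context keys below are assumptions. Shippers such as Filebeat or Fluentd can then forward these lines without regex parsing.

```python
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Keys passed via `extra=` become attributes on the record; copy the known ones.
        for key in ("order_id", "trace_id"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)

handler = logging.StreamHandler()  # writes to stderr by default
handler.setFormatter(JsonFormatter())
log = logging.getLogger("checkout")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.error("payment failed for %s", "o-1", extra={"order_id": "o-1"})
```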

9. Supply Chain and Logistics Tracking

Tracking physical goods across a global supply chain, from a factory to a customer's doorstep, is an incredibly complex orchestration. A traditional request-response system would struggle to provide real-time visibility. Delays, customs holds, and warehouse transfers would be reported in batches, leaving stakeholders blind to the current state of shipments. This latency can lead to costly rerouting, missed delivery windows, and poor customer satisfaction.

This is a prime scenario for event-driven architecture. Every scan of a package, every departure of a truck, and every GPS ping from a shipping container is a discrete event. An ItemScanned or VehicleDeparted event is published to a high-throughput message bus like Apache Kafka. From there, numerous specialized services can subscribe to this stream of location and status updates.

- Real-time Tracking Service: Consumes location events (from IoT sensors, GPS) to update a live map for both internal logistics coordinators and external customers.

- Exception and Alerting Service: Listens for specific event patterns, such as a package sitting idle for too long (NoMovementDetected event) or a deviation from its expected route (GeofenceBreached event), triggering automated alerts.

- ETA Prediction Service: Consumes all transit events, feeding them into a machine learning model to continuously recalculate and refine the estimated time of arrival, emitting an ETARecalculated event.

- Warehouse Management Service: Listens for ArrivingSoon events to prepare for incoming inventory and Delivered events to finalize records.
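Idle detection is a good example of a rule that only makes sense over a stream. This sketch keeps the latest ItemScanned timestamp per package and emits a NoMovementDetected event for any package that has been quiet longer than a hypothetical six-hour limit. A stream processor would run the same check on a timer.

```python
IDLE_LIMIT_S = 6 * 3600  # hypothetical: flag packages with no scan for six hours

def check_idle(last_scan: dict[str, float], now: float) -> list[dict]:
    """Emit a NoMovementDetected event for every package idle longer than the limit."""
    return [
        {"type": "NoMovementDetected", "package_id": package_id, "idle_s": now - ts}
        for package_id, ts in sorted(last_scan.items())
        if now - ts > IDLE_LIMIT_S
    ]

# Fold ItemScanned events into "latest scan per package"; max() tolerates out-of-order arrival.
last_scan: dict[str, float] = {}
for event in [
    {"type": "ItemScanned", "package_id": "pkg-1", "ts": 0.0},
    {"type": "ItemScanned", "package_id": "pkg-2", "ts": 20_000.0},
    {"type": "ItemScanned", "package_id": "pkg-2", "ts": 0.0},  # late-arriving, older scan
]:
    pid = event["package_id"]
    last_scan[pid] = max(event["ts"], last_scan.get(pid, event["ts"]))

alerts = check_idle(last_scan, now=25_000.0)
```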

This decoupled model, used by giants like FedEx and Maersk, creates a resilient and highly visible supply chain. If the ETA prediction service goes down for maintenance, packages are still tracked and delivered without interruption. Each component can be scaled independently to handle millions of events from a global network of sensors and scanners, providing a powerful, real-time view of operations.

Strategic Takeaway: Treat your supply chain not as a linear process but as a continuous stream of events. This paradigm shift decouples real-time visibility from the core physical movement of goods. By doing so, you can build responsive, intelligent systems that automatically detect anomalies, predict outcomes, and provide unparalleled transparency to customers without a single point of failure. Use event schemas like CloudEvents to standardize data from diverse sources like IoT devices and carrier APIs.
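For reference, a CloudEvents 1.0 envelope requires only four attributes: `specversion`, `id`, `source`, and `type`. The sketch below wraps a scan payload by hand rather than through an SDK. The `type` and `source` values are hypothetical naming choices.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Optional

def to_cloudevent(event_type: str, source: str, data: dict, subject: Optional[str] = None) -> dict:
    """Wrap a source-specific payload in a CloudEvents 1.0 envelope (JSON format)."""
    event = {
        "specversion": "1.0",                 # required
        "id": str(uuid.uuid4()),              # required; source + id identify the event
        "source": source,                     # required; URI-reference of the producer
        "type": event_type,                   # required; what happened
        "time": datetime.now(timezone.utc).isoformat(),  # optional, RFC 3339 timestamp
        "datacontenttype": "application/json",           # optional
        "data": data,
    }
    if subject is not None:
        event["subject"] = subject            # optional; here, the package concerned
    return event

ce = to_cloudevent(
    "com.example.logistics.item.scanned",     # hypothetical type name
    "/carriers/example/hubs/fra-1",           # hypothetical source
    {"package_id": "pkg-1", "hub": "fra-1"},
    subject="pkg-1",
)
print(json.dumps(ce, indent=2))
```

Whether a scan comes from a warehouse scanner or a carrier API, consumers read the same four envelope attributes and only need to understand the `data` payload for the `type` they subscribe to.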

10. Reactive User Interface Updates

The event-driven paradigm isn't just for backend services; it has fundamentally transformed modern user interfaces. Traditionally, keeping a UI in sync with server data required constant polling, an inefficient and slow process. A reactive UI flips this model on its head. Instead of the client constantly asking, "Is there anything new?", the server pushes updates only when data changes, creating a seamless, real-time experience.

This approach is the magic behind collaborative tools where multiple users can see each other's changes instantly. When a user in a shared document types a character, an event like CharacterAdded is sent to the server, typically over a WebSocket. The server then broadcasts this event to all other connected clients. Each client's application state manager, like Redux or Vuex, listens for these events and updates the specific UI component without a full-page reload.

- Server: Receives a UserAction event (e.g., cell edit, comment added) from one client via a persistent connection like a WebSocket.

- Event Bus/Broadcaster: The server processes the action and publishes a StateChanged event to a topic or channel that all subscribed clients are listening to.

- Client State Manager: The frontend application consumes the StateChanged event and updates its local state.

- UI Component: A reactive framework like React or Vue automatically re-renders the components whose data has changed instead of reloading the page, keeping updates efficient and responsive.
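The server-side broadcast step can be sketched with asyncio. The sketch uses one in-memory queue per connected client as a stand-in for that client's WebSocket. It also assumes the sender has already applied its own edit optimistically, so the event goes only to everyone else. With several server instances, you would need a shared pub/sub layer behind this.

```python
import asyncio

class Broadcaster:
    """Fan a StateChanged event out to every connected client except the sender."""

    def __init__(self) -> None:
        self.clients: dict[str, asyncio.Queue] = {}  # one queue per connection (stand-in)

    def connect(self, client_id: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self.clients[client_id] = queue
        return queue

    async def on_user_action(self, sender_id: str, action: dict) -> None:
        event = {"type": "StateChanged", "doc_id": action["doc_id"], "patch": action["patch"]}
        for client_id, queue in self.clients.items():
            if client_id != sender_id:  # assumption: sender already applied its edit locally
                await queue.put(event)

async def demo() -> tuple[dict, int]:
    hub = Broadcaster()
    alice = hub.connect("alice")
    bob = hub.connect("bob")
    await hub.on_user_action("alice", {"doc_id": "d1", "patch": {"cell": "B2", "value": 42}})
    return bob.get_nowait(), alice.qsize()

bob_event, alice_pending = asyncio.run(demo())
```

Sending a small patch rather than the whole document is what keeps the events granular enough for targeted UI updates.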

This architecture is the backbone of applications like Google Docs, Figma, and Slack, where instant collaboration and data synchronization are core to the user experience. By leveraging client-side event listeners, these platforms provide a fluid, desktop-like feel within a web browser, making them powerful examples of event-driven architecture in action.

Strategic Takeaway: Extend event-driven principles to the frontend to build dynamic, real-time user experiences. Use WebSockets for persistent, low-latency communication. Design your events to be granular, allowing for precise UI updates instead of costly full-state reloads. This decouples the UI from the need to poll, reducing server load and creating a far more engaging and responsive application for your users.

Event-Driven Architecture: 10 Use-Case Comparison

| Use Case | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| E-commerce Order Processing | High: distributed async flows, idempotency, event versioning | Moderate–High: message broker, databases, monitoring, scalable services | Decoupled services, scalable order throughput, eventual consistency | High-volume online retail, marketplaces, multi-tenant platforms | Independent scaling, fault isolation, easy extensibility |
| Real-time Fraud Detection | Very High: low-latency ML + rule engines, continuous tuning | High: stream processors, feature stores, low-latency compute, model infra | Millisecond fraud blocking, reduced chargebacks, risk of false positives | Payments, banks, fintech, high-risk transaction systems | Fast detection, adaptive models, immediate mitigation |
| Real-time Analytics & Data Pipelines | High: multi-stage pipelines, schema evolution, orchestration | High: Kafka/Kinesis, stream processors, storage, schema registry | Near-real-time BI, unified stream/batch analytics, improved decision-making | Telemetry, BI dashboards, IoT analytics, product analytics | Low-latency insights, flexible routing, many consumers supported |
| IoT Device Management & Monitoring | High: device heterogeneity, edge logic, security | High: MQTT/CoAP, edge gateways, scalable ingestion, device certs | Real-time device visibility, predictive maintenance, automated actions | Industrial IoT, smart buildings, large sensor fleets | Massive-scale handling, predictive maintenance, automation |
| User Activity Tracking & Personalization | Medium: event taxonomy, consent, ML pipelines | Moderate: event pipelines, analytics, recommendation engines | Personalized experiences, higher engagement and conversions | Media, e-commerce, streaming, advertising platforms | Real-time personalization, behavioral insights, improved conversion |
| Real-time Notification & Alerting Systems | Medium: multi-channel delivery, deduplication, scheduling | Moderate: messaging providers, templates, delivery tracking | Timely user notifications, improved engagement, delivery metrics | User alerts, marketing triggers, ops/incident notifications | Multi-channel reach, preference management, immediate delivery |
| Workflow Orchestration & Automation | High: state machines, long-running flows, compensation logic | Moderate–High: orchestration engine, persistence, observability | Automated multi-step processes, audit trails, reduced manual work | Onboarding, approvals, claims processing, content moderation | Process visibility, automation, compliance-friendly audits |
| Log Aggregation & Centralized Monitoring | Medium: collectors, indexing, query pipelines | High: storage, indexing engines, agents, retention policies | Faster troubleshooting, operational visibility, proactive alerts | SRE, ops monitoring, security incident response | Comprehensive visibility, root-cause analysis, alerting |
| Supply Chain & Logistics Tracking | High: many integrations, geo events, regulatory complexity | High: GPS/IoT sensors, real-time processing, integration layers | End-to-end visibility, predictive ETAs, exception detection | Shipping, fleet management, warehousing, global logistics | Real-time tracking, proactive resolution, optimized routing |
| Reactive User Interface Updates | Medium: client-side complexity, conflict resolution | Moderate: WebSocket/real-time infra, client frameworks, pub/sub backends | Fluid UX, real-time sync, collaborative features | Collaborative editors, live dashboards, chat, trading UIs | Reduced polling, responsive UI, consistent real-time collaboration |

So, Should You Go All In on Events?

After exploring these ten diverse event-driven architecture examples, from real-time fraud detection systems that protect revenue to slick, reactive UIs that delight users, a critical question emerges: is this the silver bullet for every engineering problem? The honest answer, as is often the case in complex systems design, is a resounding "it depends."

Adopting an event-driven mindset is less about a single technology choice and more about a fundamental shift in how you view your system's data and logic. It's a move from a world of direct, synchronous requests to a world of asynchronous, observable facts. The e-commerce order processing example showed us how this decoupling creates resilience: a failing notification service doesn't need to bring the entire checkout flow to a halt. Similarly, the Kafka-powered analytics pipeline demonstrated how events can be a "source of truth" that multiple downstream consumers can tap into for different purposes, from business intelligence to machine learning.

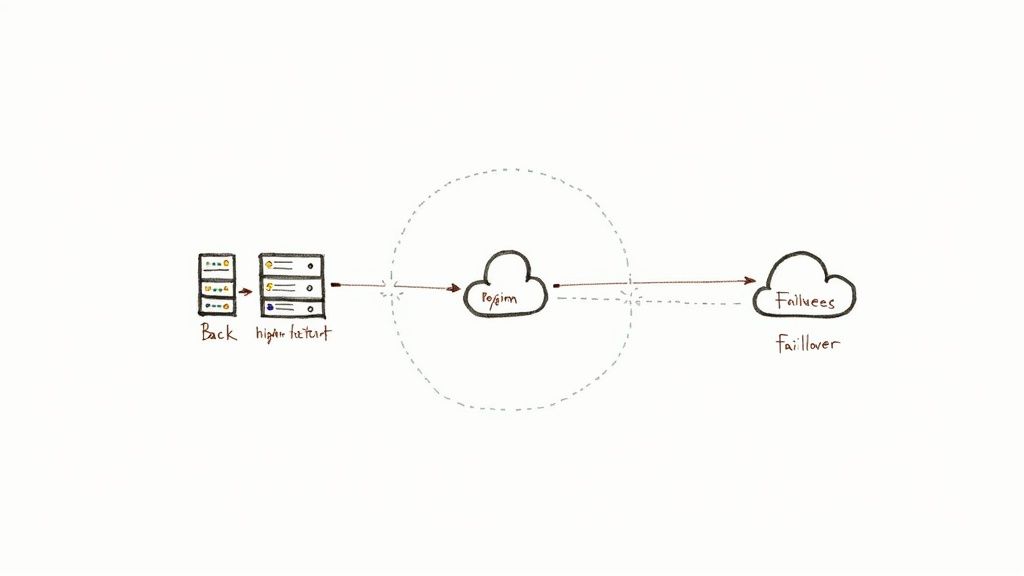

However, this power comes with its own set of challenges. We've seen that loose coupling comes at the price of eventual consistency, which can be a jarring transition for teams accustomed to immediate, transactional guarantees. Debugging a distributed system where a single user action triggers a cascade of events across multiple services is genuinely harder. As we discussed, robust observability, structured logging, and distributed tracing are not just nice-to-haves; they are table stakes for survival.

Your Strategic Takeaways and Next Steps

So, where do you go from here? The journey into event-driven architecture isn't an all-or-nothing leap. It's a series of deliberate, strategic steps.

- Start Small and Isolate: Don't start by rewriting your core monolithic application. Identify a bounded context that is naturally asynchronous. A notification service, a user activity tracker, or a background processing pipeline are all excellent candidates to get your feet wet. Use this first project to build your team's muscle memory around brokers, idempotency, and asynchronous debugging.

- Embrace the Broker: The message broker (like RabbitMQ, Kafka, or AWS SQS/SNS) is the heart of your new architecture. Understand its specific guarantees. Does it promise at-least-once delivery? What about message ordering? Choosing the right broker for the job is paramount; the needs of a high-throughput IoT data ingestion pipeline are vastly different from those of a simple task queue.

- Rethink Your Data Contracts: When services only communicate through events, the structure of those event messages becomes your API. These are your data contracts. Version them carefully. Have a clear plan for schema evolution, because a breaking change in an event producer can silently cripple multiple downstream consumers days later.
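One common way to evolve a contract without breaking consumers is an upcaster: the consumer converts older event versions to the latest shape before handling them. The `schema_version` field and the v1-to-v2 change below are hypothetical. A schema registry with compatibility checks is the usual tooling around this.

```python
def upcast_order_placed(event: dict) -> dict:
    """Normalize any known OrderPlaced version to the latest shape (v2) before handling it."""
    version = event.get("schema_version", 1)  # hypothetical: v1 events carried no version field
    if version == 1:
        # Hypothetical change: v1 used a float `total`; v2 uses integer cents plus a currency.
        return {
            "schema_version": 2,
            "order_id": event["order_id"],
            "total_cents": round(event["total"] * 100),
            "currency": "USD",  # assumed default for legacy v1 events
        }
    if version == 2:
        return event
    raise ValueError(f"unsupported OrderPlaced schema_version {version}")

old = {"order_id": "o-1", "total": 49.99}
new = {"schema_version": 2, "order_id": "o-2", "total_cents": 1500, "currency": "EUR"}
```

Failing loudly on an unknown version is deliberate: a consumer that silently misreads a newer event is exactly the "cripple downstream consumers days later" failure described above.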

Key Insight: The most successful adoptions of event-driven architecture happen incrementally. They begin at the edges of an existing system, proving their value in non-critical workflows before being trusted with core business logic. This approach mitigates risk and allows the organization's operational skills to mature alongside the architecture.

Ultimately, mastering the patterns behind these event-driven architecture examples is about adding a powerful set of tools to your engineering toolkit. It's about building systems that are not just scalable and performant, but also resilient, adaptable, and ready for the future. The initial learning curve is steep, but the payoff is a system that can evolve and grow with the complexity of your business, one event at a time. The world is asynchronous, and it's time our architectures reflected that reality.

Feeling overwhelmed by the tradeoffs or unsure where to start your event-driven journey? As a consultant and technical mentor, I specialize in helping engineering leaders at startups and scale-ups design, build, and audit production-grade systems like the ones discussed here. If you need hands-on guidance navigating these complex architectural decisions, you can learn more and get in touch at Kuldeep Pisda.
