Pairing Django and PostgreSQL isn't just a popular choice; it's a rite of passage for building web applications that are meant to last. I think of it as a strategic decision, pairing Django's wonderfully abstract Object Relational Mapper (ORM) with PostgreSQL's almost obsessive focus on data integrity and performance. This is the foundation you pour when you're building a skyscraper, not a garden shed.

Why Django and PostgreSQL Are Such Good Friends

You know how some tools just feel right together? That's Django and PostgreSQL. It's more than convenience; it's a partnership built on a shared philosophy of doing things the right way. They both care deeply about correctness, scalability, and frankly, our sanity as developers.

I learned this the hard way on one of my first big projects. We kicked things off with a simpler, file-based database, thinking it would be quicker for prototyping. And it was... for about two weeks.

Then the client's requirements got more complex. We suddenly needed to handle concurrent writes, tricky joins, and specific data types our initial choice just choked on. The refactor was a nightmare, fueled by late nights migrating data and rewriting queries from scratch.

Making the switch to PostgreSQL felt like coming up for air. Suddenly, tasks that were a huge headache became straightforward. The entire system felt more reliable, and we could finally get back to building features instead of constantly fighting our database.

A Shared Philosophy of Excellence

So what makes this combination so effective? It really comes down to a few core principles they both champion. This isn't just about picking two popular tools; it's about creating a cohesive system where each part makes the other stronger. When you're trying to choose a technology stack, this kind of synergy is pure gold.

The Django community certainly agrees. Developer surveys consistently show an overwhelming preference for PostgreSQL. In fact, recent data shows that a staggering 76% of Django developers pick it as their go-to database. It's not even a close contest.

Here's a breakdown of what makes them such a powerhouse duo:

- Data Integrity Above All: Both Django and PostgreSQL are obsessed with keeping your data safe and consistent. Django's ORM acts as a secure gateway to the database, while PostgreSQL provides bulletproof transaction support and strict data typing.

- Engineered for Growth: From day one, both are built to scale. Django's architecture is designed to grow with your application, and PostgreSQL is legendary for its ability to manage massive datasets and high-concurrency workloads without breaking a sweat.

- Deep Integration and Advanced Features: The contrib.postgres module in Django says it all. This isn't a bolt-on solution; it's a deep, native integration that lets you tap directly into PostgreSQL's unique, powerful features like JSONField, ArrayField, and full-text search, all from your Python code.

Think of it like a master chef and a perfectly stocked pantry. Django is the chef, an expert at crafting complex applications. PostgreSQL is the pantry, filled with high-quality, specialized ingredients that let the chef create virtually anything without compromise.

This guide is built on that powerful foundation. We're going to move past the simple "what" and get deep into the "how" and "why," showing you exactly how to set up, optimize, and really push the limits of this combination to build truly exceptional apps.

Setting Up Your Project for Success

Alright, let's get our hands dirty. A clean, correct initial setup is the single best investment you can make in a new project. It's like pouring the concrete foundation for a house; getting it right from the start prevents a world of pain later. I once lost half a day chasing a cryptic connection error only to find I had a typo in the database name. Never again.

This section is all about getting Django and PostgreSQL talking to each other smoothly and securely. We'll walk through the essentials: installing PostgreSQL, adding the necessary Python adapter, and then dialing in Django's settings.py file. This isn't just about making it work; it's about making it work well from day one.

If you're starting a new project from the ground up, our guide on starting a Django project without the headaches provides some great wider context.

The Essential First Steps

Before we can even think about Django, we need two key components in place. The first is PostgreSQL itself, and the second is the bridge that lets Python speak its language.

- Installing PostgreSQL: Your first job is to get a PostgreSQL server running. How you do this depends on your operating system. For macOS, Homebrew is your best friend (brew install postgresql). On Linux, your package manager (apt-get or yum) is the way to go. Windows users can grab an installer directly from the official PostgreSQL website.

- Installing the Python Adapter: Django doesn't talk to PostgreSQL natively. It uses a library called a "database adapter" to translate. The most common and recommended one is psycopg2. You can install it right into your project's virtual environment with a simple pip command: pip install psycopg2-binary

Using psycopg2-binary is often the quickest way to get up and running. It comes with precompiled dependencies, which saves you from potential build headaches that can pop up when compiling from source. The psycopg2 documentation treats the binary package as a development and testing convenience and advises building psycopg2 from source for production.

Configuring Your Django Settings

Now for the main event: connecting your Django project. This all happens in your settings.py file, inside the DATABASES dictionary. Here's a breakdown of what a production-grade setup looks like.

# settings.py
import os

from dotenv import load_dotenv

load_dotenv()  # Loads variables from .env file

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': os.getenv('DB_NAME'),
        'USER': os.getenv('DB_USER'),
        'PASSWORD': os.getenv('DB_PASSWORD'),
        'HOST': os.getenv('DB_HOST', 'localhost'),
        'PORT': os.getenv('DB_PORT', '5432'),
    }
}

Let's pause and unpack this configuration, because every single line here is important.

- ENGINE: This tells Django exactly which database backend to use. For us, it has to be django.db.backends.postgresql. No substitutes.

- NAME, USER, PASSWORD: These are your database credentials. Never, ever hardcode these directly in your settings file. This is a massive security risk. Instead, we use environment variables, loaded from a .env file.

- HOST and PORT: These specify where your database server is running. For local development, localhost and the default PostgreSQL port 5432 are usually correct.

Using environment variables is non-negotiable for security. It keeps your secret keys, API credentials, and database passwords out of your version control history, preventing them from being accidentally exposed on GitHub. A simple tool like python-dotenv makes this dead simple to manage.

As you get your project off the ground, it's also a great time to think about your deployment strategy, including whether you'll manage your own infrastructure. For those interested in a deeper dive, there's a fantastic guide on setting up your development or home server environment that covers the hardware and networking side of things.

With your settings configured correctly, you now have a clean, secure, and robust connection between Django and PostgreSQL, ready for you to start building.
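One way to avoid the typo-in-the-database-name kind of afternoon is to fail loudly at startup when a variable is missing. The sketch below is only an illustration: `build_databases` and the `REQUIRED` tuple are hypothetical names, not part of Django, and the variable names simply mirror the ones used in the settings above.

```python
import os

# Variables that have no sensible default (names mirror the settings above).
REQUIRED = ("DB_NAME", "DB_USER", "DB_PASSWORD")


def build_databases(env=os.environ):
    """Build a Django-style DATABASES dict from environment variables.

    Raises RuntimeError naming every missing required variable, so a
    misspelled entry in .env fails at startup instead of at the first query.
    """
    missing = [key for key in REQUIRED if not env.get(key)]
    if missing:
        raise RuntimeError("Missing database settings: " + ", ".join(missing))
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env["DB_NAME"],
            "USER": env["DB_USER"],
            "PASSWORD": env["DB_PASSWORD"],
            "HOST": env.get("DB_HOST", "localhost"),
            "PORT": env.get("DB_PORT", "5432"),
        }
    }
```

In settings.py this would be used as `DATABASES = build_databases()` after `load_dotenv()` has run.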

This is where the magic really starts. The Django ORM is a brilliant piece of engineering on its own, abstracting away raw SQL and making database interactions feel perfectly Pythonic. But when you pair it with PostgreSQL, you move beyond simple queries and unlock a whole new dimension of functionality.

Your database stops being just a passive storage unit and becomes an active, intelligent partner in your application.

I remember the first time this clicked for me. I was building a system to track product variations, each with its own unique set of attributes. My initial thought was to create a messy web of related models. It felt clunky, over-engineered, and just plain complicated. Then I discovered PostgreSQL's native support for structured data types, accessible directly through Django. It was a complete game-changer.

Moving Beyond Basic Fields

Most of us start with the standard fields like CharField and IntegerField. These are the bread and butter of any application, and they get you far. But PostgreSQL offers specialized data types that can handle complex data far more efficiently than trying to shoehorn everything into a traditional relational model.

The visual below shows the simple, powerful hierarchy we're working with. PostgreSQL is the solid foundation, Psycopg2 is the essential connector, and Django's ORM is the brilliant application layer we interact with.

This elegant structure is what allows Django to tap directly into PostgreSQL's most advanced features, making what should be complex operations feel surprisingly simple.

Your New Favorite Model Fields

Thanks to the django.contrib.postgres module, you can use these powerful, PostgreSQL-specific fields directly in your Django models. This is a huge win for both performance and code clarity. No more wrestling with complex joins for simple data structures.

Here are the big three you should get to know immediately:

- ArrayField: This field lets you store a list of values, like strings or integers, directly in a single database column. Think tags on a blog post, a list of permissions for a user, or sensor readings from an IoT device. You can often say goodbye to that extra Tag model and its join table.

- JSONField: This is arguably the most powerful of the bunch. JSONField lets you store arbitrary JSON objects, complete with nested data. It's perfect for unstructured or semi-structured data like user settings, detailed product specifications, or API responses you need to cache. The best part? You can query inside the JSON structure directly from the ORM. (Since Django 3.1 it is imported from django.db.models rather than django.contrib.postgres; on PostgreSQL it is stored as jsonb.)

- HStoreField: A bit older but still very useful, HStoreField is for storing simple key-value pairs where both keys and values are strings. It's a fantastic, lightweight alternative to JSONField when you just need a flat dictionary.

These fields aren't just for convenience. They allow PostgreSQL to index and query the contents of these structures efficiently, often outperforming complex relational joins for specific use cases. Your database becomes aware of your data's internal shape.

To give you a clearer picture, here's a quick look at how some of Django's advanced fields map to PostgreSQL's native types.

Django ORM Field Mapping to PostgreSQL Types

| Django Model Field | PostgreSQL Data Type | Common Use Case |
|---|---|---|
| ArrayField | array | Storing a list of tags, permissions, or any simple collection without a separate model. |
| JSONField | jsonb | Handling complex, nested, or unstructured data like user profiles or product specifications. |
| HStoreField | hstore | Storing flat key-value data, such as feature flags or simple metadata. |
| RangeField | range | Representing a range of values, like a price range or a period of time. |

This tight integration means you're not just simulating these structures in Python; you're using the database's native, optimized data types.

A Practical Example: A Product Model

Let's make this real. Imagine we're building an e-commerce platform and need a Product model. A product might have a list of tags for searching, various technical specifications, and other metadata.

Here's how we could model it using PostgreSQL's special fields:

# models.py
from django.contrib.postgres.fields import ArrayField
from django.db import models


class Product(models.Model):
    name = models.CharField(max_length=255)
    description = models.TextField()

    # Store a list of search tags directly on the model
    tags = ArrayField(
        models.CharField(max_length=50),
        blank=True,
        default=list,
    )

    # Store complex, nested product specifications.
    # JSONField is imported from django.db.models since Django 3.1;
    # the old django.contrib.postgres.fields.JSONField was removed in 4.0.
    specs = models.JSONField(blank=True, default=dict)

    def __str__(self):
        return self.name

Look how clean and self-contained that is. Without these fields, we would have needed a separate Tag model and a Spec model, likely connected with foreign keys or even a many-to-many relationship. For a deeper look at those, you can explore our guide on mastering the many-to-many relationship in Django. But for this specific use case, we've simplified our schema dramatically.

Now for the truly cool part: querying this data. We can filter products based on the contents of these fields.

# Find all products tagged with 'electronics'
electronics = Product.objects.filter(tags__contains=['electronics'])

# Find all products with a screen resolution of '1920x1080'
full_hd_products = Product.objects.filter(specs__screen__resolution='1920x1080')

This is the power of using Django and PostgreSQL together. You get the flexibility of schema-less data structures with the transactional safety and robust querying of a world-class relational database, all wrapped up in the beautiful Django ORM.
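If the lookup semantics feel opaque, it can help to model them in plain Python first. The sketch below is not how Django or PostgreSQL implement anything; `array_contains` and `json_path` are hypothetical helpers that only mimic what `tags__contains=[...]` (every listed value must be present) and a nested key lookup like `specs__screen__resolution` are expected to match.

```python
def array_contains(stored, wanted):
    """Model of tags__contains=[...]: every wanted value must be stored."""
    return set(wanted) <= set(stored)


def json_path(document, *keys):
    """Model of a nested key lookup: walk the keys, return None if any is absent."""
    current = document
    for key in keys:
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


# Sample rows standing in for Product instances (made-up data).
products = [
    {"name": "Monitor", "tags": ["electronics", "display"],
     "specs": {"screen": {"resolution": "1920x1080"}}},
    {"name": "Desk", "tags": ["furniture"], "specs": {}},
]

electronics = [p["name"] for p in products
               if array_contains(p["tags"], ["electronics"])]
full_hd = [p["name"] for p in products
           if json_path(p["specs"], "screen", "resolution") == "1920x1080"]
print(electronics, full_hd)
```

The point of the model is the edge cases: a product missing the `screen` key simply doesn't match, and a `contains` lookup with two tags only matches rows that carry both.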

Tuning Your Application for Peak Performance

Let's get real for a moment: a slow app is a dead app. The simple, elegant queries that flew during development can suddenly grind your entire system to a halt as your user base and data grow. This is where we separate the hobby projects from production-grade systems.

We're going to tackle performance optimization head-on, focusing on the most common and impactful issues you'll face when pairing Django and PostgreSQL. The good news? Django gives us all the tools we need to solve these problems.

The Magic of Database Indexing

Imagine trying to find a topic in a massive textbook with no index. You'd have to scan every single page. That's a "full table scan," and it's what your database falls back to when no suitable index exists for a query. On a large table, it's painfully slow.

A database index is just like the index in that book. It's a special lookup table the database uses to find rows much, much faster. Instead of scanning the whole book, it just looks up the term in the index and jumps directly to the right page.

Django makes adding indexes incredibly simple right inside your model's Meta class.

# models.py
from django.db import models


class Order(models.Model):
    customer_name = models.CharField(max_length=100)
    order_date = models.DateTimeField()
    status = models.CharField(max_length=20)

    class Meta:
        indexes = [
            models.Index(fields=['status']),
            models.Index(fields=['order_date']),
        ]

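By adding models.Index to the Meta.indexes list, we're telling PostgreSQL to build and maintain an index on the status and order_date columns. Queries that filter on these fields can now use an index lookup instead of scanning the whole table, which on large tables is dramatically faster. The trade-off is slightly slower writes, since every index has to be kept up to date.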

Proving It with EXPLAIN ANALYZE

You don't have to take my word for it. PostgreSQL gives us a powerful tool called EXPLAIN ANALYZE that shows exactly how it plans to execute a query and how long it actually takes. (It really does run the query to get those timings, so be careful with anything that writes.) Running this on a query before and after adding an index is a real eye-opener.

You'll see the query plan switch from a slow Seq Scan (that's our full table scan) to a lightning-fast Index Scan. The performance difference can be orders of magnitude, turning a query that takes seconds into one that takes milliseconds.

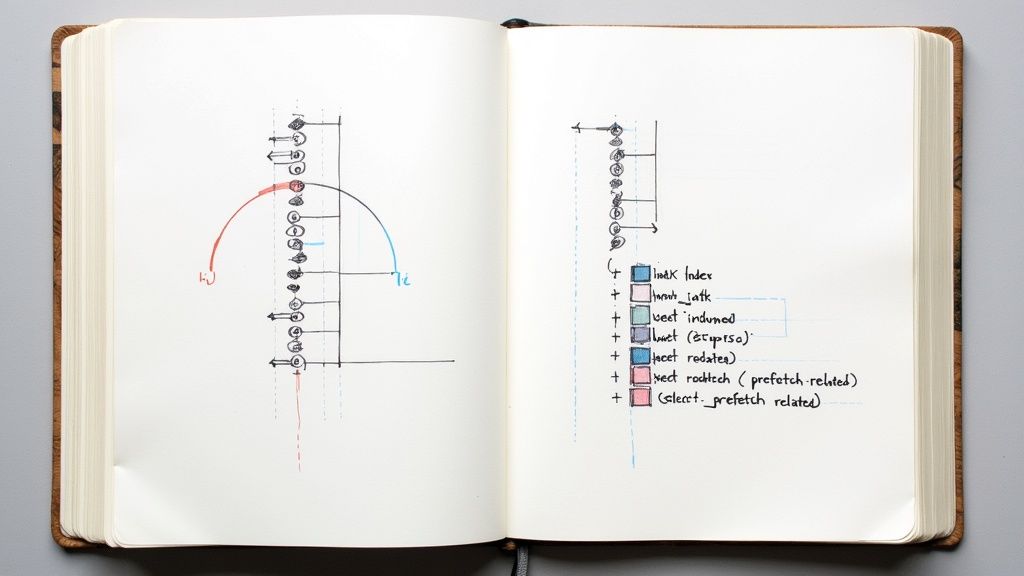
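To watch a plan flip without standing up a server, here is a small stand-in using Python's built-in sqlite3 module. Note the swap: SQLite's EXPLAIN QUERY PLAN is used in place of PostgreSQL's EXPLAIN ANALYZE, so the wording of the output differs (SCAN and SEARCH rather than Seq Scan and Index Scan), and it reports the plan only, not timings. The table, data, and index name are made up for the illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, order_date TEXT)"
)
conn.executemany(
    "INSERT INTO orders (status, order_date) VALUES (?, ?)",
    [("shipped" if i % 10 else "pending", f"2024-01-{i % 28 + 1:02d}")
     for i in range(1000)],
)


def plan(sql, params=()):
    """Return the planner's description of how it would run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column is the detail text


query = "SELECT * FROM orders WHERE status = ?"

before = plan(query, ("pending",))  # no index yet: a full scan
conn.execute("CREATE INDEX idx_orders_status ON orders (status)")
after = plan(query, ("pending",))   # the planner can now use the index

print("before:", before)
print("after: ", after)
```

Against PostgreSQL, the equivalent check from Django is `queryset.explain(analyze=True)`, which returns the server's own plan and timings as text.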

Slaying the N+1 Query Dragon

The most notorious performance monster in the ORM world is the "N+1 query problem." It's sneaky, dangerously easy to create by accident, and can bring your application to its knees. I once debugged a page that was taking over ten seconds to load. The culprit? It was making over 400 separate database queries because of this exact issue.

Here's how it happens. Imagine you want to display a list of blog posts and show the author's name for each one.

# This is the N+1 problem in action!
posts = Post.objects.all()  # Query 1: Get all posts

for post in posts:
    print(post.author.name)  # Query 2, 3, 4... N+1: Get author for EACH post

This code first makes one query to get all the posts. Then, inside the loop, it makes a new database query for every single post just to fetch its author. One hundred posts? That's one hundred and one queries. A performance disaster.

Luckily, Django provides two elegant solutions:

- select_related: Perfect for foreign key and one-to-one relationships. It tells the ORM to fetch the related objects in the same database query using a SQL join.

- prefetch_related: The go-to for many-to-many and reverse foreign key relationships. It works a bit differently by making a separate lookup for the related items and then joining them in Python.

Here's how we fix our broken code with a single line change.

# The correct, high-performance way
posts = Post.objects.select_related('author').all()  # Just ONE query!

for post in posts:
    print(post.author.name)  # No new database hit here

Just like that, Django fetches all the posts and all their authors in a single, efficient database round trip. That page that took ten seconds to load? It was down to under 200 milliseconds after this fix. This isn't just a trick; it's a fundamental technique for any serious work with Django and PostgreSQL.
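If you'd like to see the query counts for yourself, the sketch below reproduces the two access patterns with the standard-library sqlite3 module, counting SELECT statements via the connection's trace callback. It's a stand-in, not Django: the tables and rows are invented, `lazy_names` plays the role of the loop that touches `post.author`, and `joined_names` plays the role of what `select_related('author')` asks the database to do.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE post (
        id INTEGER PRIMARY KEY,
        title TEXT,
        author_id INTEGER REFERENCES author(id)
    );
    INSERT INTO author (id, name) VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO post (title, author_id) VALUES
        ('One', 1), ('Two', 2), ('Three', 1), ('Four', 2), ('Five', 1);
""")

executed = []
# Record every SELECT the connection runs from here on.
conn.set_trace_callback(
    lambda sql: executed.append(sql)
    if sql.lstrip().upper().startswith("SELECT") else None
)


def lazy_names():
    """N+1 pattern: one query for the posts, then one more per post."""
    names = []
    posts = conn.execute("SELECT title, author_id FROM post ORDER BY id").fetchall()
    for _title, author_id in posts:
        row = conn.execute(
            "SELECT name FROM author WHERE id = ?", (author_id,)
        ).fetchone()
        names.append(row[0])
    return names


def joined_names():
    """Single query with a JOIN, the shape select_related produces."""
    sql = (
        "SELECT author.name FROM post "
        "JOIN author ON author.id = post.author_id ORDER BY post.id"
    )
    return [name for (name,) in conn.execute(sql).fetchall()]


executed.clear()
lazy = lazy_names()
lazy_count = len(executed)

executed.clear()
joined = joined_names()
joined_count = len(executed)

print(lazy_count, joined_count)
```

With five posts the lazy version issues six SELECTs and the joined version one, and both return the same names. In a real Django test suite, `assertNumQueries` is the usual way to pin this down so a regression gets caught.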

PostgreSQL's continued popularity really hinges on its power to handle these real world performance challenges. In fact, its adoption among Django developers has been on a steady climb. One recent survey showed its share increased by 2 percentage points, cementing its spot as the top database choice. This trend speaks volumes about PostgreSQL's ability to adapt to modern, data intensive workflows. You can discover more insights from the Django developer community on jetbrains.com.

Navigating Advanced Patterns and Common Pitfalls

Alright, you've got the basics down. Your app is talking to the database, the ORM is doing its thing, and your queries are reasonably snappy. But the real journey starts now: the one that takes an app from "it works" to "it's production-grade." This is where we tackle the tricky stuff and sidestep the pitfalls that trip up even seasoned pros.

Think of this section as a collection of hard-won lessons, the kind you typically learn from a late-night production bug or a feature that craters under load. These are the insights that separate a merely functional app from a truly resilient one.

Mastering Database Migrations Safely

Migrations are one of Django's most celebrated features, but let's be honest: they can also be a source of pure terror. A migration that sails through on your local machine can crash and burn spectacularly in production, leaving your database in a messy, inconsistent state. I once pushed a migration that tried to rename a column on a massive table. It locked the table for several agonizing minutes during peak traffic. Painful lesson learned.

So, how do you handle migrations with the respect they command?

- Always Write Reversible Migrations: Make this your mantra. Every single migration should be reversible. Django's auto-generated migrations are usually good about this, but if you're writing a custom RunPython migration, you must provide a reverse function to undo its work. Without it, you can't roll back a failed deployment.

- Handle Data Changes in Stages: If you need to migrate data as part of a schema change, break it into separate deployments. First, add the new field. Second, deploy and run a data migration to populate that new field. Finally, in a later deployment, run another migration to remove the old field. This multi-step dance avoids locking your tables for long stretches.

- Test Against a Production Clone: Before you even think about running a risky migration on your live database, test it against a recent copy of production. It's the only way to know for sure how it will behave with a real-world dataset.
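The first two points above can be sketched in a few lines. This is a rough illustration using the standard-library sqlite3 module as a stand-in database, with an invented `orders` table and `status_code` column; batching the backfill is my assumption about how to keep each transaction short, not something Django does for you. In a real project, `forwards` and `backwards` would be the two callables handed to `migrations.RunPython(forwards, backwards)`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
conn.executemany("INSERT INTO orders (status) VALUES (?)", [("Shipped",)] * 25)
conn.commit()

# Stage 1 (first deployment): add the new column as nullable.
conn.execute("ALTER TABLE orders ADD COLUMN status_code TEXT")


def forwards(batch_size=10):
    """Stage 2: backfill the new column in small batches, committing each one."""
    last_id = 0
    while True:
        ids = [row[0] for row in conn.execute(
            "SELECT id FROM orders WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        )]
        if not ids:
            return
        conn.execute(
            "UPDATE orders SET status_code = lower(status) WHERE id BETWEEN ? AND ?",
            (ids[0], ids[-1]),
        )
        conn.commit()  # one short transaction per batch
        last_id = ids[-1]


def backwards():
    """Reverse of stage 2: clear the backfilled values so a rollback is clean."""
    conn.execute("UPDATE orders SET status_code = NULL")
    conn.commit()


forwards()
filled = conn.execute(
    "SELECT COUNT(*) FROM orders WHERE status_code = 'shipped'"
).fetchone()[0]

backwards()
cleared = conn.execute(
    "SELECT COUNT(*) FROM orders WHERE status_code IS NULL"
).fetchone()[0]

# Stage 3 (a later deployment) would drop the old `status` column.
```

Running forwards and then backwards leaves the table exactly as stage 1 left it, which is the property you want before trusting a rollback.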

Treat migrations like you treat your application code. They aren't just an afterthought; they are a critical part of your deployment that can bring your entire service down if you get it wrong.

Unleashing Full Text Search

As your application grows, simple icontains queries just don't cut it anymore. They become slow, clunky, and lack the sophistication users expect. This is where PostgreSQL's built-in full-text search engine becomes your secret weapon. It's blazingly fast, understands language-specific stemming (so "running" matches "run"), and can even rank results by relevance.

Best of all, Django's django.contrib.postgres module gives you a beautiful ORM-level integration. You can create a SearchVector across multiple fields and filter it with a SearchQuery.

For instance, building a powerful search for a blog app is surprisingly straightforward:

from django.contrib.postgres.search import SearchVector, SearchQuery

query = SearchQuery("python performance", search_type="websearch")

results = Post.objects.annotate(
    search=SearchVector("title", "body"),
).filter(search=query)

That one ORM call translates into a full-text search that runs entirely inside Postgres. On larger tables, pair it with a GIN index or a stored SearchVectorField so the vector isn't recomputed for every row on every search. You get search-engine-quality results without having to bolt on another service like Elasticsearch. This is a perfect example of how choosing Postgres pays off, unlocking advanced features that let you build more complex applications. In fact, this versatility is why Postgres is used for everything from web apps to geospatial analysis, as detailed in this research on PostgreSQL use cases.

The Silent Killer: Connection Limits

Finally, let's talk about something that bites a lot of people: connection pooling. Every time a web request hits your Django app, it needs a database connection. By default, Django opens a new connection for each request and closes it afterward. This is fine for a small site, but for a high-traffic application, this constant opening and closing can completely overwhelm your database server, which can only handle a finite number of connections.

This is where a tool like PgBouncer becomes your best friend. It acts as a middleman, sitting between your Django application and your PostgreSQL database, managing a small pool of active connections. Your app just talks to PgBouncer, which instantly hands over a ready-to-use connection from its pool instead of making Postgres do all the work.

This simple change dramatically cuts down on overhead and stops you from hitting your server's connection limits, ensuring your application stays fast and responsive even when the traffic spikes.
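On the Django side, pointing at PgBouncer is mostly a settings change. The fragment below is a sketch under a few assumptions: PgBouncer is listening on its default port 6432, it runs in transaction pooling mode, and the `PGBOUNCER_HOST` and `PGBOUNCER_PORT` variable names are made up for this example.

```python
import os

DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": os.getenv("DB_NAME"),
        "USER": os.getenv("DB_USER"),
        "PASSWORD": os.getenv("DB_PASSWORD"),
        # Point Django at PgBouncer, not at PostgreSQL directly.
        "HOST": os.getenv("PGBOUNCER_HOST", "localhost"),  # hypothetical variable
        "PORT": os.getenv("PGBOUNCER_PORT", "6432"),       # PgBouncer's default port
        # Let PgBouncer own connection reuse; Django closes its side per request.
        "CONN_MAX_AGE": 0,
        # Required when the pooler runs in transaction pooling mode.
        "DISABLE_SERVER_SIDE_CURSORS": True,
    }
}
```

The last setting matters because server-side cursors assume the same backend connection across statements, which transaction pooling does not guarantee.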

Wrapping It All Up: Your Go-To Plan for Future Projects

We've covered a ton of ground, from making that first connection between Django and PostgreSQL to fine-tuning performance like a seasoned pro. Think of this as your personal cheat sheet: the key takeaways you'll want taped to your monitor the next time you fire up a new project.

Let's distill everything we've talked about into a clear, actionable roadmap. The goal here isn't just to recap; it's to give you a solid mental model for building high-quality, production-ready applications from day one.

Your Core Philosophy

This isn't just a random list of tips. It's a way of thinking about building better systems with this powerhouse duo.

- Think Like a Team: Treat Django and PostgreSQL as a single, unified system, not just two separate tools you've bolted together. Django's contrib.postgres module is there for a reason: it's your bridge to unlocking Postgres's most potent features directly from your Python code.

- Use the Right Tool for the Job: Before you start building a complex web of related models for something like tags or properties, take a step back and ask: "Could a JSONField or an ArrayField handle this more cleanly?" Leaning on these native data types often simplifies your entire schema, cuts down on gnarly joins, and can even boost performance.

- Performance is Not an Afterthought: Never just assume your queries are fast. Make EXPLAIN ANALYZE your best friend. Get into the habit of using select_related and prefetch_related proactively to squash the N+1 query problem before it ever has a chance to cripple your app in production. Indexing isn't something you do later; it's a fundamental part of a healthy schema.

- Treat Migrations with Respect: Always write reversible migrations, especially when you're getting fancy with RunPython operations. For any complex schema change that also involves shifting data around, plan on a multi-stage deployment. Trust me, you don't want to be the one who locked a critical table during peak hours.

Ultimately, marrying Django and PostgreSQL gives you the best of both worlds. You get a rock-solid relational foundation with the flexibility to handle the messy, complex data that modern applications demand. It's a stack that lets you start simple and scale up without ever hitting a brick wall.

With this foundation firmly in place, you're ready to tackle the next level: containerizing your app with Docker, setting up read replicas for high availability, or diving even deeper into full-text search. Happy building.

Frequently Asked Questions About Django and PostgreSQL

We've covered a lot of ground, from the first pip install to fine-tuning performance. But some questions always seem to find their way into developer forums or those late-night debugging sessions. Let's clear the air on a few common sticking points.

Think of this as the Q&A part of the workshop, where we tackle the stuff that's probably on your mind.

When Should I Use SQLite Instead of PostgreSQL?

This is a classic, and for good reason. SQLite is absolutely brilliant for getting a project off the ground. I use it for almost all my initial prototyping because it's a simple file, needs zero setup, and works flawlessly with Django right out of the box.

But the moment your project needs to handle more than a handful of simultaneous users, you'll want to make the switch. If you need advanced features like ArrayField or full-text search, or if the project is heading to a live production server, it's time for PostgreSQL. The robustness and data integrity it provides under pressure are non-negotiable for any serious application.

What Is the Biggest Mistake People Make with the Django ORM?

Without a doubt, the single most common and costly mistake is the N+1 query problem. It's a silent performance assassin that you often won't even notice during development when you're working with a small local database.

It happens when you fetch a list of objects, then loop through them and access a related object inside the loop. Each one of those accesses triggers a brand new database query.

This simple mistake can turn a single page load into hundreds, or even thousands, of database calls. Always be proactive. Use select_related for foreign key relationships and prefetch_related for many-to-many. This isn't just an optimization; it's a fundamental necessity.

Do I Really Need a Connection Pooler like PgBouncer?

For small to medium-sized apps, you might not feel the pain right away. Django's built-in persistent connections (enabled with the CONN_MAX_AGE setting) can handle moderate traffic just fine, and it's easy to think you can put this off forever.

However, once your application starts seeing high traffic or a large number of concurrent users, a connection pooler like PgBouncer becomes absolutely essential. Opening and closing database connections is an expensive operation. A pooler keeps a "warm" set of connections ready, preventing your app from overwhelming the database by exhausting its connection limits. This is a critical piece of infrastructure for scaling gracefully and avoiding service-crashing bottlenecks.

Building robust, scalable applications requires more than just good code; it demands thoughtful architecture and deep expertise. If your startup is looking to accelerate its roadmap with production grade systems, Kuldeep Pisda offers consulting services to help you strengthen your technical foundations. Learn more and get in touch at https://kdpisda.in.
