I once pushed a change that brought a production API to its knees. The culprit? A seemingly harmless database query that nobody caught in review. We have all been there: the endless back and forth, the vague 'please fix' comments, the anxiety of hitting 'merge'. Code review can feel like a chore, a bottleneck, or worse, a battleground. But what if it could be a team's greatest superpower for learning and building resilient systems?

That outage sent me on a journey. I became obsessed with understanding what separates a painful review process from one that actually elevates the code and the team. It's not about finding a rigid, one-size-fits-all set of rules. It's about cultivating humane, practical code review best practices that fit real-world teams, especially those navigating the complexities of Django, Next.js, and AI stacks. I learned that a high-performing team's ability to ship faster often hinges on its review culture. For a broader perspective on modern code review strategies, you might find an external guide on 10 Best Practices for Code Review helpful as a complementary resource.

This guide moves beyond generic advice. We will explore ten specific practices that transformed my teams' workflows from dreaded obligations to moments of genuine collaboration and growth. We will cover everything from structuring the perfect pull request and automating tedious checks to providing feedback that builds up, rather than tears down, your colleagues. Let's dive in.

1. Keep Code Reviews Small and Focused

One of the most impactful code review best practices you can adopt is committing to small, focused changes. A pull request (PR) that alters thousands of lines across a dozen files is a recipe for reviewer fatigue and missed bugs. I've been on both sides of that monster PR, and trust me, nobody wins. The core idea is that a reviewer's ability to spot issues diminishes significantly as the size of the change increases. Limiting PRs to under 400 lines of code (a guideline rooted in SmartBear's study of code review at Cisco and in line with Google's advice to keep changes small) forces both the author and the reviewer to concentrate on a single, well-defined task.

This approach dramatically reduces the cognitive load on the reviewer. Instead of trying to hold an entire complex feature in their head, they can meticulously examine a smaller, self contained unit of work. This leads to higher quality feedback, faster review cycles, and a more agile development process. It encourages incremental progress and makes it easier to roll back changes if a problem arises.

How to Implement Small Reviews

Breaking down large tasks requires a strategic mindset and a bit of discipline. Here are some actionable tips to get started:

- Deconstruct Large Features: Before writing a single line of code for a new feature, break it down into the smallest possible logical chunks. For a new Django API endpoint, this might mean one PR for the model and migrations, another for the serializer and view, and a third for the URL configuration and tests.

- Leverage Feature Flags: For changes that are part of a larger, long running feature, use feature flags (or feature toggles). This allows you to merge incomplete or dependent code into the main branch safely, keeping it hidden from users until the entire feature is ready. This is a common practice in CI/CD environments.

- Separate Refactoring from Features: Avoid mixing a bug fix with a major refactor or a new feature. If you spot an opportunity to refactor code while working on a feature, create a separate branch and PR for the refactor. This keeps the review focused on a single objective.

- Track Review Size Metrics: Use tools available in GitHub, GitLab, or specialized engineering intelligence platforms to track the average size of pull requests. Set team goals to keep this number low and celebrate when you hit your targets.
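
To make the size guideline concrete, here is a minimal sketch of a check you could run locally or in CI. It assumes you feed it the text produced by `git diff --numstat` (tab-separated added lines, deleted lines, and path). The function names and the choice to skip binary files are my own assumptions, not a standard tool.

```python
MAX_CHANGED_LINES = 400  # threshold taken from the guideline above


def count_changed_lines(numstat_output: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        added, deleted = parts[0], parts[1]
        # Binary files are reported as "-" for both counts; ignore them here.
        if added == "-" or deleted == "-":
            continue
        total += int(added) + int(deleted)
    return total


def is_reviewable(numstat_output: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """Return True when the change stays under the line limit."""
    return count_changed_lines(numstat_output) < limit
```

You might pass in the output of `git diff --numstat main...HEAD`, assuming `main` is your target branch, and treat a `False` result as a nudge to split the PR rather than a hard failure.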

2. Establish Clear Code Review Standards and Checklists

One of the quickest ways to introduce friction into a code review is ambiguity. When developers are unsure what to look for, reviews can devolve into subjective debates over stylistic preferences. Establishing clear standards and checklists is a powerful code review best practice that removes this uncertainty, ensuring every review is consistent, objective, and efficient. The goal is to create a shared understanding of what constitutes a "good" change, allowing reviewers to focus on critical logic and architecture rather than nitpicking code style.

By documenting expectations, you create a system of record that streamlines onboarding for new engineers and aligns the entire team on quality. This approach, championed by organizations like Google and Netflix, automates the easy decisions so human brainpower can be spent on complex problem solving. Instead of arguing about comma placement, reviewers can focus on substantive issues like security vulnerabilities, performance bottlenecks, or architectural integrity. This leads to higher quality code and a more collaborative, less adversarial review culture.

How to Implement Clear Standards

Building a comprehensive yet practical set of guidelines requires a collaborative effort. Here are actionable tips for creating standards that stick:

- Automate Style Enforcement: Leverage tools like Black for Python or Prettier for JavaScript to automate code formatting. This removes stylistic debates from the review process. The formatter's output is the single source of truth, not anyone's personal preference.

- Create Role Specific Checklists: A backend Django developer looks for different things than a frontend Next.js developer or a security engineer. Create tailored checklists for each role. For example, a backend checklist might include "Are database queries optimized?" while a frontend list might ask, "Does this component meet accessibility standards?".

- Integrate Checklists into Your Workflow: Don't let your checklists gather dust in a wiki. Use pull request templates in GitHub or GitLab to automatically include the relevant checklist in the PR description. This prompts both the author and the reviewer to verify that all standards have been met.

- Review and Evolve Your Standards: Your standards should be a living document. Set a recurring meeting, perhaps quarterly, for the engineering team to review and update the guidelines. This ensures they remain relevant as your technology stack, team, and best practices evolve.
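
As a rough illustration of role-specific checklists, the sketch below picks checklist items based on which files a PR touches and renders them as task-list lines you could paste into a PR description. The suffix-to-role mapping and the function names are hypothetical. Two of the checklist questions come from the examples above, and the third is a placeholder of my own.

```python
from typing import Dict, List

CHECKLISTS: Dict[str, List[str]] = {
    "backend": [
        "Are database queries optimized?",
        "Are new migrations included?",  # hypothetical extra item
    ],
    "frontend": [
        "Does this component meet accessibility standards?",
    ],
}

# Hypothetical mapping from file suffix to the role that should review it.
SUFFIX_TO_ROLE = {".py": "backend", ".tsx": "frontend", ".jsx": "frontend"}


def roles_for_files(paths: List[str]) -> List[str]:
    """Return the roles touched by a change, in first-seen order."""
    roles: List[str] = []
    for path in paths:
        for suffix, role in SUFFIX_TO_ROLE.items():
            if path.endswith(suffix) and role not in roles:
                roles.append(role)
    return roles


def render_checklist(paths: List[str]) -> str:
    """Build checklist text for the roles relevant to the changed files."""
    lines: List[str] = []
    for role in roles_for_files(paths):
        lines.append(f"{role.capitalize()} checklist:")
        lines.extend(f"- [ ] {item}" for item in CHECKLISTS[role])
    return "\n".join(lines)
```

A PR that only touches documentation produces an empty string, which keeps the template from nagging people with irrelevant questions.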

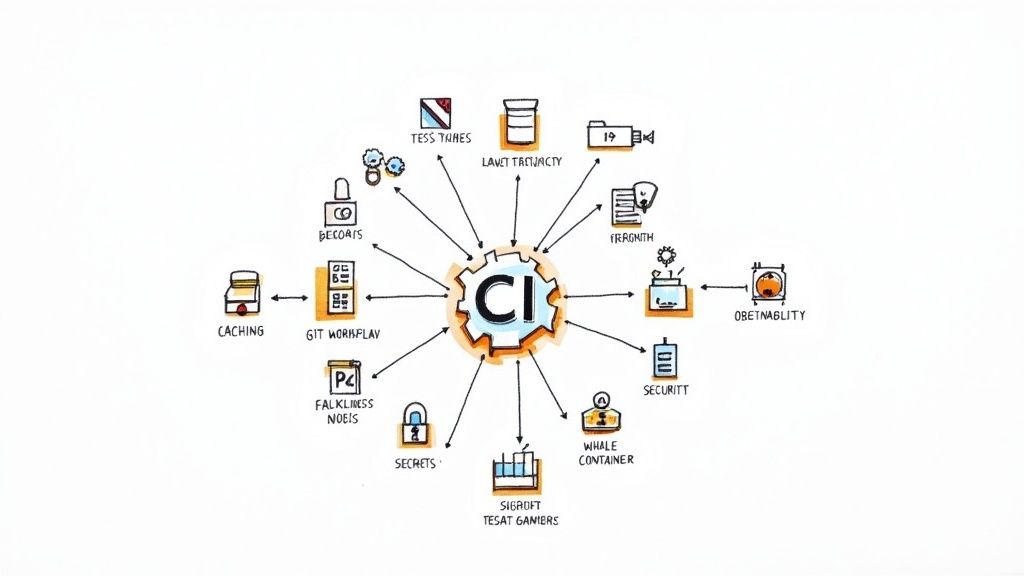

3. Automate What Can Be Automated

One of the most powerful code review best practices is to let machines handle the mundane work. Human review time is expensive and best spent on high level concerns like logic, architecture, and user experience, not debating code style or catching obvious syntax errors. Automating linting, formatting, testing, and security scanning frees up this crucial cognitive bandwidth, making reviews faster and more impactful.

By integrating automated checks directly into the development workflow, you shift the conversation from nitpicking to problem solving. Instead of a reviewer pointing out a missing semicolon, a CI/CD pipeline fails the build, forcing a fix before a human even sees the code. This practice, championed by the DevOps community, ensures a consistent quality baseline for every pull request and removes subjective style arguments from the review process.

How to Implement Automation

Setting up a robust automation pipeline creates a safety net that catches common issues early and consistently. Here's how to get started:

- Configure CI/CD Pipeline Checks: Use tools like GitHub Actions or GitLab CI to run your entire suite of checks on every pull request. This should include linting (e.g., with Flake8 for Python, ESLint for JavaScript), code formatting (Black, Prettier), and running all unit and integration tests. A failed check should block the PR from being merged.

- Use Pre-Commit Hooks: Catch issues before they even reach the server. Pre-commit hooks run checks locally every time a developer tries to make a commit. This is a common practice at companies like Meta to ensure code adheres to standards without waiting for a CI pipeline, creating a faster feedback loop.

- Integrate Static and Security Analysis: Add tools like SonarCloud or Snyk to your pipeline. These services scan for code smells, security vulnerabilities, and complex logic that could lead to bugs. Beyond standard linting and static analysis, AI coding assistants such as GitHub Copilot and its alternatives can help generate code and flag potential issues before human review.

- Automate Dependency Checks: Use tools like Dependabot or Renovate to automatically scan for outdated or vulnerable dependencies and create PRs to update them. This keeps your tech stack secure and current with minimal manual effort. Learn more about how automated testing complements these practices in our guide to Test Driven Development.
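
If you want a feel for what "a failed check blocks the PR" means in practice, here is a small sketch of a check runner. It assumes Black, Flake8, and pytest are installed and on the PATH. The `DEFAULT_CHECKS` list and the `run_checks` helper are illustrative names of mine, and a real pipeline would more likely be defined in your CI system's own configuration.

```python
import subprocess
import sys
from typing import List, Sequence

# Commands assumed to be available in the environment; adjust to your stack.
DEFAULT_CHECKS: List[List[str]] = [
    ["black", "--check", "."],  # formatting check, makes no changes
    ["flake8", "."],            # linting
    ["pytest"],                 # unit and integration tests
]


def run_checks(checks: Sequence[Sequence[str]]) -> List[str]:
    """Run each command and return the ones that failed, as strings."""
    failed: List[str] = []
    for command in checks:
        try:
            result = subprocess.run(list(command), capture_output=True, text=True)
            ok = result.returncode == 0
        except OSError:
            ok = False  # a missing tool counts as a failed check
        if not ok:
            failed.append(" ".join(command))
    return failed
```

In a CI step you could end with `sys.exit(1 if run_checks(DEFAULT_CHECKS) else 0)`, so that any failing command produces a non-zero exit code and the merge stays blocked.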

4. Require Multiple Reviewers and Diverse Perspectives

Relying on a single reviewer creates a single point of failure and limits the feedback a developer receives. A more robust approach, and a key code review best practice, is to mandate multiple reviewers for each pull request. This practice leverages the collective intelligence of the team, ensuring that code is scrutinized from various angles. By encouraging input from members with different expertise, such as a backend specialist, a frontend developer, and a security engineer, you significantly increase the chances of catching a wider range of issues, from subtle logic errors to potential vulnerabilities.

This multi reviewer model is a cornerstone of major open source projects like the Linux kernel and Kubernetes, as well as at tech giants like Google, where changes often require approval from a code owner plus at least one other engineer. The goal isn't to create bureaucracy; it's to foster collective code ownership and distribute knowledge across the team. When multiple people review a change, it breaks down information silos and helps junior developers learn by observing the thought processes of more senior team members. It transforms code review from a simple gatekeeping activity into a powerful tool for mentorship and team wide upskilling.

How to Implement a Multi Reviewer System

Setting up a multi reviewer workflow requires clear rules and automation to prevent it from slowing down your development cycle. Here's how to do it effectively:

- Use CODEOWNERS Files: Leverage the `CODEOWNERS` file in your repository (supported by GitHub, GitLab, and Bitbucket) to automatically assign required reviewers. You can specify individuals or entire teams as owners for specific file paths, ensuring the right experts always see the changes that affect their domain.

- Implement Reviewer Rotation: To prevent review fatigue and distribute the workload evenly, implement a reviewer rotation schedule. This exposes all team members to different parts of the codebase and prevents any single person from becoming a bottleneck.

- Set Clear Turnaround Expectations: Define and communicate a Service Level Agreement (SLA) for review turnaround times, for instance, 24 hours. This keeps momentum high and ensures authors are not left waiting indefinitely for feedback.

- Establish a Fallback System: Designate fallback or secondary reviewers for critical code areas. If a primary owner is on vacation or unavailable, the PR can still move forward without compromising on quality or speed. This is crucial for maintaining velocity.

- Document Team Expertise: Maintain a simple, accessible document or wiki page that maps team members to their areas of expertise (e.g., "Alice: Django ORM performance," "Bob: Next.js state management"). This helps authors intelligently request reviews from the most relevant people, especially on complex PRs.
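
Reviewer rotation can be as simple as a round-robin over the team. The sketch below is one possible approach, assuming you key the rotation on the PR number and never assign the author to their own change. The `pick_reviewers` name and the team members beyond Alice and Bob are made up for illustration.

```python
from typing import List


def pick_reviewers(team: List[str], author: str, pr_number: int, count: int = 2) -> List[str]:
    """Pick `count` reviewers in round-robin order, never the author."""
    candidates = [member for member in team if member != author]
    if not candidates:
        return []
    count = min(count, len(candidates))
    # The PR number decides where in the rotation we start.
    start = pr_number % len(candidates)
    return [candidates[(start + i) % len(candidates)] for i in range(count)]


team = ["alice", "bob", "carol", "dave"]
reviewers = pick_reviewers(team, author="bob", pr_number=7)
```

Because the starting point moves with each PR, consecutive PRs from the same author land on different pairs of reviewers, which spreads both the workload and the knowledge.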

5. Focus on Intent and Design, Not Just Syntax

One of the most profound shifts an engineering team can make in its code review process is moving beyond surface level syntax checks. While linters and static analysis tools are excellent at catching typos and style violations, a human reviewer's unique value lies in understanding the why behind the code. An effective review assesses whether the chosen approach correctly solves the business problem, aligns with the existing architecture, and sets the project up for long term maintainability.

This practice elevates the code review from a simple bug hunt to a strategic design discussion. Instead of just asking "Does this code work?", reviewers should ask, "Is this the right way to solve the problem?". This perspective, championed by figures like Steve McConnell and institutionalized in Google's engineering culture, ensures that every pull request reinforces robust design patterns and architectural integrity. It prevents the accumulation of technical debt that arises from well written but poorly designed code.

How to Implement Design Focused Reviews

Shifting the focus from syntax to architectural intent requires a deliberate change in process and mindset. Here are several ways to embed this practice into your team's workflow:

- Ask "Why" Questions: Encourage reviewers to probe the author's reasoning. Questions like, "What was the reasoning for choosing this data structure over another?" or "How does this new service fit into our larger microservices architecture?" open up crucial design conversations that document intent.

- Require Design Docs for Major Changes: For significant features or architectural modifications, mandate a brief design document before implementation begins. This document, reviewed by senior engineers, ensures alignment on the high level approach, preventing wasted effort on code that will require a major rework.

- Summarize the Change: As a reviewer, start by trying to summarize the PR's purpose in your own words. If you can't, it is a strong signal that the code's intent is unclear or the PR description is insufficient. This forces clarity from both the author and reviewer. For example, if you're reviewing an API endpoint, you should be able to connect its logic back to the core principles of great API architecture. Explore our guide to REST API design principles to deepen your understanding.

- Separate Linting from Logic: Aggressively automate all style and syntax checks. The goal is to free up human brainpower to focus exclusively on logic, design, security, and performance. If a human is commenting on brace placement, your CI pipeline is not working hard enough.

6. Provide Constructive and Respectful Feedback

The technical quality of a code review is important, but its human element is what sustains a healthy engineering culture. One of the most critical code review best practices is to ensure all feedback is constructive, actionable, and delivered with respect. The goal is to critique the code, not the person who wrote it. This approach, centered on psychological safety, transforms reviews from a dreaded judgment into a collaborative learning opportunity, boosting team morale and preventing defensiveness.

A culture of respectful feedback, championed by communities like Rust and Django, recognizes that how a comment is phrased is as important as its technical content. When reviewers frame suggestions with empathy, it fosters an environment where developers feel safe to take risks, ask questions, and grow. This human centered approach is not just a "nice to have"; it is a strategic advantage for building resilient, high performing teams that innovate faster and retain talent longer.

How to Implement Respectful Feedback

Adopting a constructive feedback style requires conscious effort and consistent practice. Here are actionable tips to elevate your review comments:

- Start with Appreciation: Acknowledge the author's effort before diving into critiques. A simple "Thanks for putting this together!" or "I appreciate the detailed tests here" sets a positive tone for the entire review.

- Phrase Suggestions as Questions: Instead of issuing a command like "Change this to use a list comprehension," try a question: "Have you considered using a list comprehension here for conciseness?" This opens a dialogue rather than shutting it down.

- Avoid Absolute Language: Words like "always," "never," or "obviously" can come across as condescending. Frame feedback with nuance, recognizing that there are often multiple valid approaches to a problem.

- Offer Alternatives and Examples: Don't just point out a problem; provide a solution. The most helpful feedback often includes a clear code snippet demonstrating the suggested improvement. This makes the feedback concrete and easy to implement.

- Go Synchronous for Complex Issues: If a discussion becomes a long, back and forth thread, move it to a quick video call. Tone is often lost in text, and a synchronous conversation can resolve misunderstandings and find a path forward much faster.
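
Here is what "offer alternatives and examples" might look like for the list comprehension question above. Imagine the comment reads, "Have you considered a list comprehension here for conciseness? Something like the second function below." Both functions and the `users` data shape are hypothetical, invented only to show a suggestion that is concrete and easy to verify.

```python
from typing import Dict, List


def active_emails_loop(users: List[Dict]) -> List[str]:
    # Version as submitted in the hypothetical PR.
    emails = []
    for user in users:
        if user["is_active"]:
            emails.append(user["email"])
    return emails


def active_emails_comprehension(users: List[Dict]) -> List[str]:
    # Reviewer's suggested alternative with the same behavior.
    return [user["email"] for user in users if user["is_active"]]
```

Attaching a working alternative lets the author compare the two directly and push back if the original reads better to them.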

7. Set Response Time Expectations and Reduce Reviewer Bottlenecks

A brilliant piece of code sitting in a review queue is blocked value. One of the most common frustrations in agile development is not the time it takes to write code, but the time it waits for review. Establishing clear Service Level Agreements (SLAs) for review turnaround is a crucial code review best practice that directly tackles this bottleneck, ensuring momentum isn't lost. The goal is to create a predictable, responsive review cycle that prevents developers from being blocked and context switching.

This practice, popularized by high velocity teams at Google and embedded in modern DevOps culture, recognizes that slow reviews are a major drag on productivity and morale. A typical and effective SLA is a 24 hour turnaround time for a first response on any pull request. This doesn't mean the PR must be approved within a day, but that the author receives meaningful feedback, signaling that the review process has started and their work is visible. This simple expectation transforms the review queue from a black hole into a predictable part of the development workflow.

How to Implement and Reduce Bottlenecks

Setting and maintaining response times requires a team wide commitment and the right systems. It's not about pressuring individuals, but about designing a process that makes prompt reviews the path of least resistance.

- Establish a Clear SLA: Formally agree on a review turnaround time, like the 24 hour first response rule. Document this in your team's engineering handbook and discuss it during onboarding. Adjust this SLA for urgent fixes or high priority features.

- Schedule Dedicated Review Time: Encourage engineers to block out specific "no meeting" times in their calendars purely for conducting code reviews. This proactive scheduling treats review work as a first class citizen, not an afterthought.

- Implement Smart Reviewer Assignment: Don't let reviews fall into a general queue. Use GitHub's `CODEOWNERS` file to automatically assign reviewers based on who owns that part of the codebase. For cross-functional changes, tools like Slack bots can distribute review requests evenly to avoid overloading a single "go-to" expert.

- Track Key Metrics: You cannot improve what you do not measure. Use platform analytics to monitor metrics like "time to first review" and "time to approval". These data points highlight process bottlenecks and can inform discussions during retrospectives. Learn more about essential engineering productivity measurements to see how this fits into the bigger picture.
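
To show how the 24 hour first response rule could be measured, here is a minimal sketch. It assumes you already have the timestamps for when a PR was opened and when it got its first review (for example, exported from your platform's API). The function names are mine, and the calculation uses plain wall-clock time, so weekends and holidays count against the SLA unless you adjust for them.

```python
from datetime import datetime, timedelta
from typing import Optional

FIRST_RESPONSE_SLA = timedelta(hours=24)  # the SLA suggested above


def time_to_first_review(
    opened_at: datetime, first_review_at: Optional[datetime], now: datetime
) -> timedelta:
    """Elapsed time until the first review, or until `now` if still unreviewed."""
    end = first_review_at if first_review_at is not None else now
    return end - opened_at


def breaches_sla(
    opened_at: datetime,
    first_review_at: Optional[datetime],
    now: datetime,
    sla: timedelta = FIRST_RESPONSE_SLA,
) -> bool:
    """Return True when the first response took (or is taking) longer than the SLA."""
    return time_to_first_review(opened_at, first_review_at, now) > sla
```

Running this over open PRs once a day gives you a short list of changes that need a nudge, without anyone having to police the queue by hand.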

8. Document Context with Clear Commit Messages and PR Descriptions

Code that is hard to understand is often hard to review. One of the most critical yet frequently overlooked code review best practices is providing rich context through well crafted commit messages and pull request descriptions. Your code explains what it does, but the documentation surrounding it must explain the why. This narrative is essential for reviewers to understand your intent, evaluate the trade offs you made, and ensure the change aligns with the project's goals without needing a separate meeting.

This documentation serves a dual purpose. For the immediate reviewer, it's a roadmap to your thought process, making their job faster and their feedback more relevant. For the future developer (which might be you six months from now), it's an invaluable archaeological record, explaining the motivation behind a change long after the original context is forgotten. Great documentation transforms a code review from a simple syntax check into a meaningful architectural discussion.

How to Implement Better Documentation

Creating high quality context is a habit that pays dividends. It requires a disciplined approach to communicating the story behind your code. Here are some actionable strategies:

- Explain the 'Why,' Not Just the 'What': Your PR description should start by clearly stating the problem or user story. Instead of saying "Added caching to the user endpoint," explain "The user endpoint was experiencing high latency under load, impacting user experience. This PR introduces a Redis cache to reduce database queries and improve response times."

- Follow a Commit Message Convention: Adopt a standard like Conventional Commits. This format (e.g., `feat:`, `fix:`, `refactor:`) creates a machine-readable history, simplifies changelog generation, and immediately tells reviewers the nature of the change. Google's practice of using the imperative mood (e.g., "Add feature" instead of "Added feature") is another powerful convention.

- Use PR Templates: Enforce consistency by creating a pull request template in your repository (e.g., in a `.github/pull_request_template.md` file). A template can prompt authors to include sections like "Problem," "Solution," "How to Test," and "Screenshots/Videos," ensuring no critical information is missed. This is similar to how robust API documentation guides consumers; find out more about crafting clear guidelines in our article on API documentation best practices.

- Link to Tickets and Issues: Always reference the corresponding ticket or issue number from your project management tool (like Jira or Linear) in both the PR description and commit messages. This creates a traceable link between the business requirement and the code that implements it.
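
A commit message convention is easy to check mechanically. The sketch below validates a commit subject line against a simplified reading of the Conventional Commits format: a type, an optional scope in parentheses, an optional `!` for breaking changes, then a colon, a space, and a description. The list of allowed types is an assumption on my part, since the specification itself only defines `feat` and `fix` and teams usually extend it.

```python
import re

# Assumed team-specific list of types; extend or trim as needed.
ALLOWED_TYPES = (
    "feat", "fix", "refactor", "docs", "test",
    "chore", "perf", "build", "ci", "style",
)

SUBJECT_PATTERN = re.compile(
    r"^(?P<type>" + "|".join(ALLOWED_TYPES) + r")"
    r"(\((?P<scope>[^()\s]+)\))?"   # optional scope, e.g. (api)
    r"(?P<breaking>!)?"             # optional breaking-change marker
    r": (?P<description>\S.*)$"     # colon, space, non-empty description
)


def is_conventional(subject: str) -> bool:
    """Return True when the subject line matches the simplified pattern."""
    return SUBJECT_PATTERN.match(subject) is not None
```

A check like this fits naturally into a commit hook or a CI step, so the convention is enforced by tooling rather than by review comments.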

9. Separate Code Style Review from Logic Review

Few things drain energy from a code review faster than debates over semicolons, trailing commas, or line length. One of the most powerful code review best practices is to automate stylistic concerns and dedicate human brainpower exclusively to logic, architecture, and correctness. By separating code style from substantive review, you eliminate a whole class of subjective, low value feedback and focus on what truly matters: building a robust and functional product.

This approach acknowledges that human attention is a finite resource. When a reviewer is busy spotting inconsistent indentation or improper quote usage, they are less likely to catch a subtle off by one error or a flawed business logic implementation. Automating this "linting" layer with tools like Prettier, Black, or gofmt makes style a non issue. The code is either compliant or the CI/CD pipeline fails, removing the need for human intervention and making style adherence an objective, non negotiable standard.

How to Implement Style and Logic Separation

Integrating automated style enforcement into your workflow is a game changer for team harmony and efficiency. Here is how to make it happen:

- Mandate Auto-Formatters: Adopt a strong, opinionated code formatter for each language in your stack. For a Next.js frontend, this is Prettier. For a Django backend, it is Black. These tools eliminate debate by providing one official style. Make running the formatter a pre-commit hook so code is always formatted before it is even pushed.

- Configure CI to Enforce Style: Your CI pipeline should have a dedicated step that checks for formatting and linting errors. Configure it to fail the build if the code does not adhere to the established rules. This provides an unemotional, automated gatekeeper for code quality.

- Establish a "No Style Comments" Rule: Explicitly agree as a team that stylistic feedback is not welcome in pull request comments. If a reviewer notices a style issue that slipped past the automation, the correct response is to improve the automation, not to leave a manual comment.

- Document What is Automated: Keep a simple document in your repository that lists the tools used (e.g., Black, flake8, ESLint) and a brief summary of their purpose. This helps new team members understand which types of feedback are handled automatically and which require human attention.

10. Learn from Code Reviews and Continuously Improve Process

A static code review process is a stale process. One of the most mature code review best practices is to treat the review cycle itself as a product that requires continuous improvement. The goal isn't just to catch bugs in a single pull request; it's to create a self reinforcing system where each review makes the team, the code, and the process itself incrementally better. This means actively learning from what happens during reviews and using that data to iterate.

Adopting this mindset transforms code reviews from a simple quality gate into a powerful engine for team growth and knowledge sharing. By systematically analyzing review data and gathering qualitative feedback, teams can identify bottlenecks, spot recurring issues (like common security flaws or performance oversights), and refine their guidelines. This approach, championed by data driven engineering cultures at Google and Microsoft, ensures your review practices evolve with your team's needs and technological stack, preventing process rot and keeping developers engaged.

How to Implement a Learning Process

Creating a feedback loop for your code review process requires intentional effort and the right tools. Here's how to establish a culture of continuous improvement:

- Establish Key Metrics: Track quantitative data to get an objective view of your process. Focus on metrics like review time (time from PR creation to merge), rework rate (how much code is changed after the first review), and the defect escape rate (bugs found in production that should have been caught in review). Tools within GitHub and GitLab provide some of these insights.

- Hold Regular Retrospectives: Dedicate time during your team's monthly or quarterly retrospectives to specifically discuss the code review process. Ask questions like, "What went well in our reviews this month?" and "Where did our process cause friction or frustration?"

- Survey Your Developers: Anonymously survey engineers quarterly to gauge their satisfaction with the review process. Ask about the quality of feedback they receive, the perceived fairness of the workload, and whether they feel the process helps them grow. Use this qualitative data to complement your metrics.

- Share Learnings and Patterns: When a particularly insightful comment or a great catch happens in a review, share it in a team channel or document. Create case studies of critical bugs caught in review to reinforce the value of the process and celebrate those successes. Adjust guidelines based on recurring anti patterns discovered.
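
The metrics above can be computed from very little data. Here is a rough sketch, assuming you can export per-PR timestamps and line counts from your platform. The `MergedPR` fields and the exact formulas are my own working definitions: rework rate as the share of changed lines that were modified after the first review, and defect escape rate as the share of known defects that reached production instead of being caught in review.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List


@dataclass
class MergedPR:
    created_at: datetime
    merged_at: datetime
    lines_changed_total: int
    lines_changed_after_first_review: int


def median_review_hours(prs: List[MergedPR]) -> float:
    """Median time from PR creation to merge, in hours."""
    hours = [(pr.merged_at - pr.created_at).total_seconds() / 3600 for pr in prs]
    return median(hours)


def rework_rate(prs: List[MergedPR]) -> float:
    """Share of changed lines that were modified after the first review."""
    total = sum(pr.lines_changed_total for pr in prs)
    if total == 0:
        return 0.0
    return sum(pr.lines_changed_after_first_review for pr in prs) / total


def defect_escape_rate(escaped_to_production: int, caught_in_review: int) -> float:
    """Share of known defects that reached production."""
    total = escaped_to_production + caught_in_review
    return escaped_to_production / total if total else 0.0
```

Treat the numbers as conversation starters for retrospectives rather than targets, since any of them can be gamed if they turn into individual performance measures.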

Top 10 Code Review Best Practices Comparison

| Practice | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Keep Code Reviews Small and Focused | Low to Moderate: process discipline and PR sizing | Minimal tooling; developer discipline; more frequent reviews | Faster reviews, higher defect detection, fewer merge conflicts | High-velocity teams, frequent incremental work | Faster feedback; easier comprehension; reduced conflicts |
| Establish Clear Code Review Standards and Checklists | Moderate: documentation and enforcement | Time to document; linters/formatters; periodic maintenance | Consistent reviews; fewer style disputes; predictable quality | Large/distributed teams; onboarding new contributors | Predictability; faster reviews; smoother onboarding |
| Automate What Can Be Automated | Moderate to High: CI and tool integration | CI/CD, linting/static analysis, maintenance and possible licensing costs | Early detection of style/bugs; reduced manual nitpicks | Mature DevOps teams; frequent PRs; security-sensitive code | Consistency; early bug/security detection; reduced manual work |
| Require Multiple Reviewers and Diverse Perspectives | Moderate: policy and reviewer assignment | More reviewer time; sufficient team size; coordination overhead | Higher defect detection; broader knowledge sharing | Critical systems; complex or cross-cutting changes | Diverse perspectives; shared ownership; fewer blind spots |
| Focus on Intent and Design, Not Just Syntax | High: cultural change and reviewer expertise | Senior reviewers; longer review time; design docs | Better architecture, fewer long-term defects, reduced technical debt | Architectural decisions; long-lived systems; complex domains | Improved design quality; long-term maintainability |
| Provide Constructive and Respectful Feedback | Low to Moderate: cultural norms and training | Training, examples, moderation; ongoing coaching | Improved morale, reduced defensiveness, more learning | Teams with juniors; remote or cross-cultural teams | Psychological safety; stronger collaboration; developer growth |
| Set Response Time Expectations and Reduce Reviewer Bottlenecks | Moderate: SLAs and workflow changes | Staffing or rotation; auto-assignment tools; metrics tracking | Faster turnaround, maintained momentum, fewer stalled PRs | Time-sensitive projects; agile teams needing steady velocity | Maintains velocity; reduces WIP; predictable SLAs |
| Document Context with Clear Commit Messages and PR Descriptions | Low: templates and discipline | PR/commit templates; small extra author time | Faster reviewer understanding; fewer clarifying questions | Distributed teams; complex features or regulated projects | Better context for reviewers and future maintainers |
| Separate Code Style Review from Logic Review | Moderate: tooling plus policy | Formatters/linters in CI; pre-commit hooks; config maintenance | Focused human reviews on logic; fewer style arguments | Teams using autoformatters; large codebases | Faster logic review; consistent style enforced automatically |
| Learn from Code Reviews and Continuously Improve Process | Moderate to High: metrics and retrospectives | Analytics tools, time for retrospectives, data analysis | Process improvements, data-driven decisions, higher satisfaction | Organizations scaling practices; improving engineering maturity | Continuous improvement; reduced systemic issues; informed changes |

Your Next Pull Request Starts Here

We have journeyed through the intricate landscape of effective code reviews, moving from the foundational principle of keeping pull requests small and focused to the cultural imperative of continuous learning. Along the way, we've explored the power of automation, the necessity of clear checklists, the art of constructive feedback, and the strategic value of diverse reviewer perspectives. The path from a chaotic, bottlenecked process to a streamlined, collaborative one is paved not with a single silver bullet, but with a series of deliberate, thoughtful improvements.

The core lesson is this: an exceptional code review process is a cultural artifact, not just a technical workflow. It is a reflection of a team's commitment to shared ownership, psychological safety, and collective growth. Tools like linters, CI pipelines, and static analysis are powerful allies, but they only amplify the underlying human system. Without a foundation of respect and a shared goal of building better software together, even the most sophisticated automation will fall short. The code review best practices we've discussed are designed to nurture that very culture.

From Theory to Actionable Change

Reading about best practices is one thing; implementing them is another. The sheer volume of advice can feel overwhelming, leading to analysis paralysis. The key is to avoid the "all or nothing" trap. You do not need to overhaul your entire process overnight. Instead, view these ten principles as a menu of options, not a rigid prescription.

Your first step is to diagnose your team's most significant pain point.

- Is review feedback inconsistent or causing friction? Start by implementing a clear checklist (Practice #2) and focusing on constructive, respectful feedback templates (Practice #6).

- Are reviews taking too long and blocking development? Champion the discipline of small, atomic pull requests (Practice #1) and set clear expectations for response times (Practice #7).

- Are reviewers getting bogged down in stylistic debates? Leverage automation by enforcing a strict linter and code formatter in your CI pipeline, effectively separating style from logic review (Practice #9).

Pick one, maybe two, of these strategies and commit to them for a few sprints. Treat it as an experiment. Gather feedback from the team: What's working? What isn't? What feels better? This iterative approach transforms the daunting task of "improving code reviews" into a series of small, manageable wins. Each small victory builds momentum, making the next improvement easier to adopt.

The Lasting Impact of a Refined Process

Mastering these code review best practices transcends the immediate benefit of catching bugs. It is an investment that pays compound interest across your entire engineering organization. It accelerates developer onboarding, as new hires learn the codebase and its standards through structured feedback. It distributes knowledge, breaking down information silos and ensuring no single person is a single point of failure. Most importantly, it fosters an environment where engineers feel empowered to experiment, to learn from mistakes, and to hold each other to a high standard of excellence.

Your next pull request is more than just a collection of code changes. It is an opportunity to practice empathy, to share knowledge, and to contribute to a culture of quality. It's a chance to be the kind of reviewer you've always wanted to have, and the kind of author who makes a reviewer's job a delight. The journey to a world class engineering culture starts right there, in the comments of your next review.

Building a high performing engineering culture goes beyond just code. If you are looking to scale your team, refine your technical strategy, or need an experienced hand to guide your architecture, consider reaching out to Kuldeep Pisda. We specialize in providing CTO as a Service and hands on consulting to help startups and scale ups like yours implement these very practices and build robust, maintainable systems.
