We’ve all been there. It’s 10 PM on a Tuesday, and an alert screams from your monitoring dashboard. A critical feature, one that was working perfectly just hours ago, has suddenly broken in production.

The pressure mounts with every passing minute as you dive into the codebase, frantically trying to pinpoint the source of the chaos. Every change you consider feels like a massive gamble. Did your last deployment cause this? Was it an obscure edge case nobody thought of? Fixing one thing might silently break three others. I once spent an entire afternoon chasing a bug like this, only to find a single misplaced comma. It's maddening.

This feeling is what I call ‘code fragility’—where the system is so interconnected and brittle that even minor adjustments can have catastrophic, unforeseen consequences. You start to lose confidence in your own code.

Caption: That feeling when production is on fire and you're just trying to find the right log file.

The Problem With Testing Last

The standard response to this anxiety is always, "We need more tests." So we write them, but often as an afterthought. We bolt on a suite of tests after the feature is already built, trying to cover all the paths we can remember. While this is better than nothing, it rarely builds true confidence.

This reactive approach to testing inevitably leaves gaps. You end up with a safety net full of holes, which leads to several all too common pain points:

- Regression Bugs: New features frequently break old ones. That late night bug hunt becomes a recurring nightmare.

- Unclear Intent: Without tests written first, the code's intended behavior can become ambiguous, making it a headache for new team members to get up to speed.

- Fear of Refactoring: The codebase grows rigid and difficult to improve because developers are terrified to touch anything for fear of breaking it.

"The problem isn't a lack of tests. The problem is that the tests are treated as a chore, a final checkbox to tick before deployment, rather than as a fundamental part of the design process."

This is precisely the painful, high stakes scenario that forces us to ask a better question. What if, instead of using tests to confirm what we've already built, we used them to guide what we are about to build? What if they could prevent the fire instead of just helping us put it out?

This question is at the heart of what test driven development aims to solve.

Flipping The Script With Test Driven Development

Instead of treating tests as a final, often rushed, step in the development process, what if we flipped the script entirely? This is the whole idea behind Test Driven Development (TDD), a discipline that asks you to write a test before you write a single line of the actual code.

Think of it like an architect designing a building. They don't just start laying bricks and hope for the best. First, they create a detailed blueprint that defines every single requirement: where the walls go, how strong the foundation must be, and where the windows will sit. In the world of TDD, your test is that blueprint. It’s a precise, executable specification for the feature you're about to build.

This isn't some new fad. TDD's roots go back to the late 1990s, when Kent Beck championed test-first programming as a core practice of Extreme Programming (XP), and he popularized it under the TDD name in the early 2000s. Some early studies also reported that developers using TDD wrote significantly more tests and tended to be more productive, especially on brand new projects.

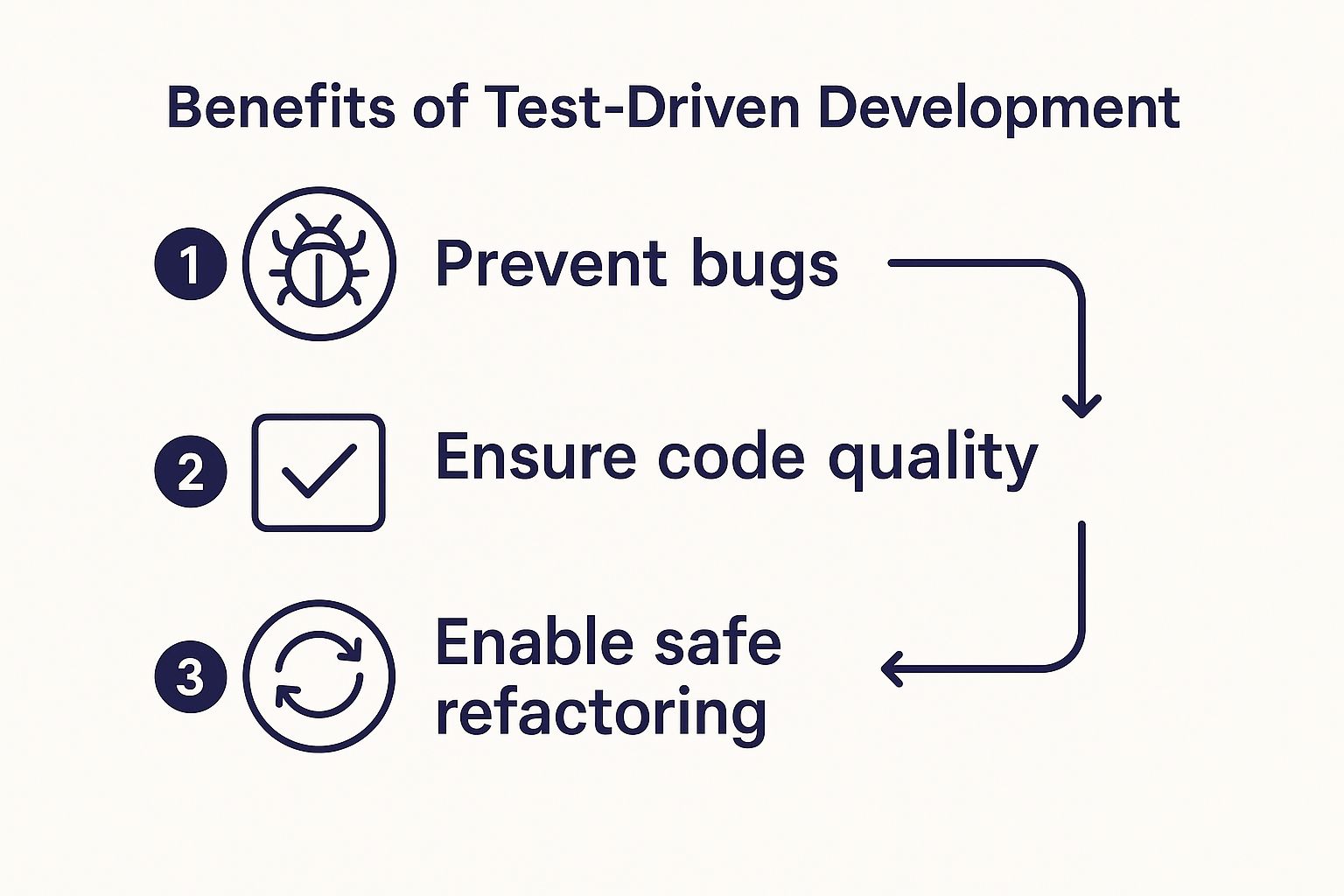

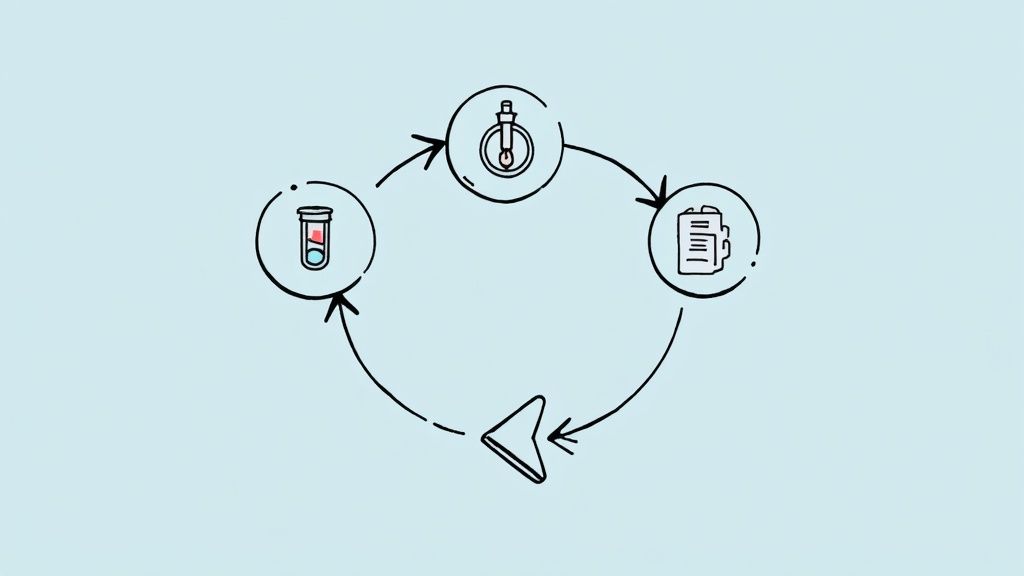

The Red Green Refactor Mantra

The entire TDD process is guided by a simple but powerful loop: Red, Green, Refactor. This isn't just a list of steps; it's a rhythm that brings discipline and predictability to your coding sessions.

- Red (Write a Failing Test): You start by writing a test for a piece of functionality that doesn't exist yet. This test must fail, and that's the point. Watching it fail shows that the test can actually catch the missing behavior, rather than passing by accident, and that the feature is genuinely missing.

- Green (Write Just Enough Code to Pass): Your next and only goal is to make that test pass. You do this as quickly and simply as you can. We're not aiming for elegant, perfect code here. The objective is to write the absolute minimum amount of code required to turn that red test green.

- Refactor (Clean Up Your Code): With a passing test now acting as a safety net, you can improve the code you just wrote. You can remove duplication, make variable names clearer, or rethink the structure entirely, all with the confidence that if you break something, your test will immediately fail and tell you.

This cycle creates an incredibly tight feedback loop. Each phase builds upon the last, creating a reinforcing system where quality isn’t an afterthought but a prerequisite for moving forward.

This simple workflow helps prevent bugs from ever taking root and allows you to safely improve your code over time without fear.

Let's pause and reflect for a moment. Before we dive into an example, here is what this cycle looks like in a table. Each step has a clear purpose that contributes to the next, forming a continuous cycle of quality.

The TDD Cycle Explained

| Phase | Goal | Developer Action |
|---|---|---|
| Red | Define the requirement | Write a single, small test for functionality that doesn't exist. Run it and watch it fail. |
| Green | Fulfill the requirement | Write the most straightforward code possible to make the failing test pass. No more, no less. |
| Refactor | Improve the implementation | Clean up the code you just wrote, removing duplication and improving its design, all while keeping the test green. |

Once the refactoring is done and the test is still passing, you're ready to start the cycle all over again with the next small piece of functionality.
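To make the loop concrete outside of any framework, here is a minimal sketch of one pass through the cycle using Python's built-in unittest module. The slugify function and its expected behavior are hypothetical, chosen only for illustration.

```python
import re
import unittest


# Red: these tests are written first. Before slugify() exists, running
# them fails with a NameError, which confirms the behavior is missing.
class SlugifyTest(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello TDD World"), "hello-tdd-world")

    def test_drops_punctuation(self):
        self.assertEqual(slugify("Red, Green, Refactor!"), "red-green-refactor")


# Green: a first version such as
#     return title.lower().replace(" ", "-")
# is enough for the first test. The second test then fails and drives
# the next small change.

# Refactor: with both tests passing, the body was tidied into a single
# regular expression. The tests stay green, so behavior is unchanged.
def slugify(title):
    """Turn a title into a lowercase, hyphen-separated slug (hypothetical helper)."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)
```

Saved to a file, this can be run with something like python -m unittest followed by the file name. The point is the order of events recorded in the comments, not the function itself.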

The "aha" moment with TDD comes when you realize it’s not primarily a testing technique—it’s a design technique. It forces you to think about how your code will be used before you even write it.

This shift in mindset is a game changer. It naturally aligns with essential software design best practices like SOLID and DRY, which are critical for building robust applications.

By focusing on one small, testable piece of behavior at a time, you can't help but create code that is more modular, decoupled, and easier to understand. You're building with intention, not just reacting to a list of requirements. The result isn't just a suite of tests, but a better designed, more maintainable system built one small, confident step at a time.
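One way to see this design pressure in practice: a function that reads the system clock internally is awkward to test, so writing the test first tends to push the clock out into a parameter. The sketch below assumes a made-up is_recent helper with a made-up max_age parameter; it is an illustration of the idea, not a recommended API.

```python
from datetime import datetime, timedelta
import unittest


def is_recent(published_at, now, max_age=timedelta(days=7)):
    """Return True if published_at falls within max_age before now.

    Passing `now` in, rather than calling datetime.now() inside, is the
    design choice the tests pushed us toward: the caller controls time.
    """
    return timedelta(0) <= now - published_at <= max_age


class IsRecentTest(unittest.TestCase):
    def setUp(self):
        # A fixed "current time" keeps the tests deterministic.
        self.now = datetime(2024, 1, 15, 12, 0)

    def test_article_from_yesterday_is_recent(self):
        self.assertTrue(is_recent(self.now - timedelta(days=1), self.now))

    def test_article_from_last_month_is_not_recent(self):
        self.assertFalse(is_recent(self.now - timedelta(days=30), self.now))

    def test_article_dated_in_the_future_is_not_recent(self):
        self.assertFalse(is_recent(self.now + timedelta(days=1), self.now))
```

Because the tests had to supply a time, the function ended up decoupled from the system clock without anyone setting out to "design for testability".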

A Practical TDD Example in Django

Theory is great, but TDD doesn't truly click until you get your hands dirty. Let's step away from the concepts for a moment and actually build a small, practical feature in a Django app. This will give you a real feel for that Red Green Refactor rhythm.

We'll work from a simple user story:

As a user, I want to see the five most recently published articles on the blog homepage.

This is a classic feature for any content site. It’s simple enough to follow along easily but complex enough—touching the model, view, and URL—to perfectly demonstrate the TDD cycle. We’ll walk through each step, starting with a test that fails, writing just enough code to make it pass, and then cleaning it all up.

Step 1: Red — Writing The First Failing Test

Before we even think about models or views, our first job is to write a test that describes what we want to achieve. We need a test case that confirms a request to the homepage returns a 200 OK status and, crucially, contains the five most recent articles in its context.

Let's assume we have a basic Article model with a title and a published_at field. We'll pop open a test file, say tests/test_views.py, and lay down our first test.

    # blog/tests/test_views.py
    from datetime import timedelta

    from django.test import TestCase
    from django.utils import timezone

    from blog.models import Article


    class HomepageViewTest(TestCase):
        def test_displays_five_most_recent_articles(self):
            # Arrange: create 6 articles with strictly increasing timestamps,
            # so 'Article 5' is the newest and 'Article 0' is the oldest.
            now = timezone.now()
            for i in range(6):
                Article.objects.create(
                    title=f'Article {i}',
                    published_at=now - timedelta(minutes=5 - i),
                )

            # Act: make a request to the homepage.
            response = self.client.get('/')

            # Assert: check the response.
            self.assertEqual(response.status_code, 200)
            self.assertContains(response, 'Article 5')     # The newest one
            self.assertNotContains(response, 'Article 0')  # The oldest one
            self.assertEqual(len(response.context['articles']), 5)

If we run this test right now, it fails. Assuming nothing is routed at / yet, Django's URL resolver cannot match the path (internally a Resolver404), so the test client gets back a 404 response and the first assertion fails because 404 is not 200. This is perfect. Our test has failed for exactly the right reason, proving that the functionality is missing.

We are officially in the Red phase.

Step 2: Green — Making The Test Pass

Our mission now is simple: write the absolute minimum amount of code required to make this test pass. Nothing more. We're not aiming for beautiful, optimized code; we just want to see that satisfying green checkmark.

First, let's create the URL route.

    # myproject/urls.py
    from django.urls import path

    from blog.views import homepage_view

    urlpatterns = [
        path('', homepage_view, name='homepage'),
    ]

Next, we need the simplest possible view. It will query for the articles, order them, and pass them into a template.

    # blog/views.py
    from django.shortcuts import render

    from .models import Article


    def homepage_view(request):
        articles = Article.objects.order_by('-published_at')[:5]
        return render(request, 'homepage.html', {'articles': articles})

Finally, we need a bare bones template to render the article titles.

    <!-- templates/homepage.html -->
    <html>
      <body>
        <h1>Blog Homepage</h1>
        <ul>
          {% for article in articles %}
            <li>{{ article.title }}</li>
          {% endfor %}
        </ul>
      </body>
    </html>

With these pieces in place, we run our test again. This time, it passes. The URL resolves, the view fetches the right data, the template renders it, and all our assertions hold true. We have successfully reached the Green phase.

Step 3: Refactor — Improving The Code

Now that our test is green, we have a safety net. This is where we can confidently look back at the code we just wrote and ask, "Can this be better?" The refactor phase is all about improving clarity, removing duplication, and enhancing the design without changing the functionality. Our test will scream at us immediately if we break anything.

Looking at our homepage_view, the logic is pretty simple. But what if other parts of our app also need to grab the most recent articles? We can extract this query into a custom manager on the Article model. This move makes the logic reusable and our view even cleaner.

First, let's create that custom manager.

    # blog/models.py
    from django.db import models
    from django.utils import timezone


    class ArticleManager(models.Manager):
        def recent(self, count=5):
            return self.get_queryset().order_by('-published_at')[:count]


    class Article(models.Model):
        title = models.CharField(max_length=200)
        published_at = models.DateTimeField(default=timezone.now)

        objects = ArticleManager()  # Assign our custom manager

With the manager in place, our view becomes much more expressive and readable.

    # blog/views.py
    from django.shortcuts import render

    from .models import Article


    def homepage_view(request):
        articles = Article.objects.recent()
        return render(request, 'homepage.html', {'articles': articles})

The view now clearly communicates its intent: "get the recent articles." The implementation details of what "recent" actually means are neatly tucked away in the model manager where they belong. After making this change, we run our tests one more time. Still green.

This confirms our refactoring was a success. We've completed the Refactor phase, and the cycle is ready to begin all over again for the next feature.

This deliberate process ensures that every piece of logic is backed by a test from the moment it’s created. Of course, properly structuring your application is also key. For a deeper dive on that topic, you can explore our guide on how to structure a Django project for more best practices.

The Real World Payoff of Adopting TDD

After running through the Red Green Refactor cycle a few times, the big question always pops up: "Is all this extra effort actually worth it?" I get it. At first, TDD feels slower, like you're taking two steps forward and one step back. But the payoff isn't just a nice to have; it fundamentally changes how you build and maintain software for the better.

The most immediate win? A dramatic drop in those late night bug hunts we all dread. Since every single piece of logic is born from a test that defines its correct behavior, you're building a comprehensive safety net from day one. This isn't just about catching errors; it's about preventing a whole class of bugs from ever being written in the first place.

A Safety Net For Your Future Self

Think of your test suite as a gift to the person you'll be six months from now. When you need to refactor a complex piece of business logic or add a new feature, you won't have to hold your breath and just hope for the best. Instead, you can make changes with total confidence, knowing that if you accidentally break something, a test will fail immediately and tell you exactly what went wrong.

This confidence completely transforms your relationship with the codebase. Refactoring stops being a risky, terrifying chore and becomes a normal, safe part of your daily workflow. The code stays clean and adaptable instead of slowly calcifying into a rigid, unchangeable monolith. It's the secret to keeping technical debt at bay.

This is especially critical when you're building APIs. A robust test suite ensures your contracts are always met, which is a core part of learning how to make fail safe APIs in Django.

TDD isn't about being a perfect developer. It’s about creating a system where you don’t have to be perfect to write great, reliable code. Your tests have your back.

There's a reason TDD is so closely tied to Agile software development. In one widely cited industrial case study of teams at IBM and Microsoft (Nagappan et al., 2008), the TDD teams saw pre-release defect density fall by 40% to 90% compared with similar non-TDD projects, at the cost of roughly 15% to 35% more initial development time.

From Ambiguity to Living Documentation

Here's another powerful side effect: your tests become a form of living, executable documentation. A new developer joining the team doesn't have to guess what some mysterious function does. They can just look at its tests and see precisely what it's expected to do, what inputs it handles, and what edge cases it covers.

This clarity actually starts much earlier, during the design process. You simply can't write a test for something if you don't know exactly what it's supposed to do. This forces you to think through the requirements with incredible precision before writing a single line of implementation code.

Ultimately, TDD is a key part of streamlining software development because it drives higher quality outcomes from the very beginning.

To really see the difference, let's compare the two approaches side by side.

Traditional Development vs. Test Driven Development

The table below breaks down the fundamental differences in workflow and mindset between the "test after" and "test first" approaches.

| Aspect | Traditional Development (Tests After) | Test Driven Development (Tests First) |
|---|---|---|
| Design Focus | Focused on implementation first, which often leads to code that is hard to test. | Focused on the interface and behavior first, leading to more modular, decoupled code. |
| Bug Discovery | Bugs are typically found late in the cycle, during QA or, even worse, in production. | Bugs are caught instantly, the moment a test fails during development. |
| Confidence | Low confidence in making changes, with a high fear of causing regressions. | High confidence in refactoring and adding new features. The test suite is your safety net. |
| Documentation | Documentation is a separate, manual task that quickly becomes outdated. | The test suite serves as up to date, executable documentation of the system's behavior. |

The contrast is pretty stark. While the initial investment in writing tests first might feel like a slowdown, the long term gains in maintainability, code quality, and developer sanity are immense. It’s the difference between building a house on a solid foundation versus building it on sand and just hoping it doesn’t collapse.

Pitfalls on the TDD Journey

It’s easy to get sold on the dream of clean code and the iron clad safety net TDD promises. But let's get real for a second: flipping the switch to TDD isn't an overnight change. There’s a genuine learning curve, and I’ve seen plenty of developers hit a wall, get frustrated, and walk away convinced it’s just not for them.

The first hurdle is almost always the feeling that you're suddenly coding in slow motion. If you're used to diving headfirst into writing the implementation, the deliberate "test first" rhythm feels like you’ve got the handbrake on. Writing a test, watching it fail, and then writing just enough code to make it pass can feel counterintuitive and painstakingly slow. It’s a total mental shift from "How do I build this?" to "How do I describe what this thing is supposed to do?"

This initial speed bump is a huge reason why TDD adoption isn't universal. Despite the obvious upsides, recent data suggests only about 20% to 25% of software engineers use it regularly. Most of the time, it's this learning curve and the pressure to ship quickly that gets in the way. If you're curious about the numbers, you can find more insights on TDD adoption rates and the factors behind them on cosn.io.

The Art Of Writing Good Tests

Once you get past the change of pace, the next mountain to climb is learning to write good tests. It’s one thing to test a simple function that adds two numbers, but it's another thing entirely when you’re wrestling with the messy reality of a real world application.

This is where a lot of people get stuck. The common pain points usually boil down to a few things:

- Complex Dependencies: How on earth do you test a piece of code that needs to talk to a database, call an external API, or rely on some third party service? Writing tests for these can feel like a nightmare.

- Brittle Tests: You write a test that’s welded to the implementation details. The moment you refactor the code—even if the functionality is identical—the test shatters. This is maddening and completely defeats the purpose.

- Testing The Wrong Thing: It’s so easy to fall into the trap of testing how your code works instead of what it does. A good test shouldn’t care about the internal mechanics, only the final, observable behavior.
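The difference between a brittle test and a behavior-focused one is easier to see side by side. This is a minimal sketch built around a hypothetical Greeter class; both tests pass today, but only the second would survive a refactor that renames or inlines the private helper.

```python
import unittest
from unittest import mock


class Greeter:
    """Hypothetical class used only to contrast two testing styles."""

    def _normalize(self, name):
        # Internal detail: trim whitespace and use title case.
        return name.strip().title()

    def greet(self, name):
        # Public behavior: return a greeting for the cleaned-up name.
        return f"Hello, {self._normalize(name)}!"


class BrittleGreeterTest(unittest.TestCase):
    def test_calls_normalize_helper(self):
        # Coupled to HOW greet() works: this breaks if _normalize is
        # renamed or inlined, even when the output stays identical.
        with mock.patch.object(Greeter, "_normalize", return_value="Ada") as helper:
            Greeter().greet("  ada ")
        helper.assert_called_once_with("  ada ")


class BehaviorGreeterTest(unittest.TestCase):
    def test_greets_with_trimmed_title_cased_name(self):
        # Coupled only to WHAT greet() returns, so it survives refactoring.
        self.assertEqual(Greeter().greet("  ada lovelace "), "Hello, Ada Lovelace!")
```

The brittle test also says nothing about the greeting text, so it could stay green while the actual output is wrong.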

Practical Tips For Overcoming The Hurdles

So, how do you get through this phase without throwing in the towel? The key is to be pragmatic. Start small. Please, don't try to apply TDD to that horrifying, ten year old legacy feature on your first day.

Think of it like learning an instrument. You don’t start with a complex symphony; you start with scales and simple chords. TDD is no different. Build that muscle memory on small, isolated bits of code first.

Here are a few strategies that genuinely helped me get over the hump:

- Embrace Mocks and Stubs: For those nasty external dependencies, get comfortable with tools like Python's unittest.mock. Mocks and stubs are your best friends. They let you fake the behavior of databases or APIs, so you can test your logic in a clean, isolated environment without all the external noise.

- Focus on Behavior, Not Implementation: Before writing a test, always ask yourself, "What is the observable result of this code?" A solid test verifies that outcome, not the specific steps the code took to arrive there. This makes your tests far more resilient to refactoring.

- Start with Pure Functions: Your TDD training wheels should be "pure functions"—functions with no side effects. They take an input, return an output, and that's it. They are the easiest things in the world to test and are perfect for getting comfortable with the Red Green Refactor cycle in a low stakes way.
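As a rough illustration of the first two tips, the sketch below stubs out an external API with unittest.mock.Mock and asserts only on the observable result. The fetch_display_name function, the http_get parameter, and the example.com URL are all assumptions made up for this example.

```python
import unittest
from unittest import mock


def fetch_display_name(user_id, http_get):
    """Return a user's display name, or 'Anonymous' when the lookup fails.

    `http_get` is any callable that takes a URL and returns a dict. In
    production it would wrap a real HTTP client; the URL is a placeholder.
    """
    try:
        payload = http_get(f"https://api.example.com/users/{user_id}")
    except ConnectionError:
        return "Anonymous"
    return payload.get("name", "Anonymous")


class FetchDisplayNameTest(unittest.TestCase):
    def test_returns_name_from_api_payload(self):
        # Stub: the fake client returns a canned payload, no network needed.
        fake_get = mock.Mock(return_value={"name": "Ada"})
        self.assertEqual(fetch_display_name(42, fake_get), "Ada")

    def test_falls_back_when_api_is_unreachable(self):
        # Stub: the fake client simulates a network failure.
        fake_get = mock.Mock(side_effect=ConnectionError("network down"))
        self.assertEqual(fetch_display_name(42, fake_get), "Anonymous")

    def test_falls_back_when_payload_has_no_name(self):
        fake_get = mock.Mock(return_value={})
        self.assertEqual(fetch_display_name(42, fake_get), "Anonymous")
```

Passing the HTTP client in as an argument is one possible design; patching a module-level client with mock.patch would work too, at the cost of tying the test to where that client lives.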

The goal isn't to become a TDD purist overnight. It’s about gradually building a new habit that, trust me, pays off massively in the long run, both in your code quality and your own confidence as a developer.

Key Takeaways on Test Driven Development

We’ve covered a lot of ground here—from the late night anxiety of pushing a bug to production all the way to the disciplined, steady rhythm of the Red Green Refactor cycle.

Now, let's tie it all together. Let’s make this real.

At its heart, TDD is less about testing and more about design. It flips the script, turning tests from a chore you do at the end into a tool you use from the very beginning. The process itself is almost deceptively simple, but the impact is profound.

What to Remember

- The Red Green Refactor Cycle: This is the heartbeat of TDD. You start by writing a test you know will fail (Red). Then, you write the absolute minimum code required to make it pass (Green). Finally, you clean up your mess with the confidence that your tests have your back (Refactor).

- Better Code, Naturally: Because you're forced to think about how you'll use the code before you even write it, TDD nudges you toward building things that are more modular and easier to maintain.

- A Safety Net You Can Trust: That growing suite of tests becomes your safety net. It gives you the courage to refactor mercilessly and add new features without that constant fear of breaking something hiding in the shadows.

- Documentation That Doesn't Lie: Your tests become a living, breathing form of documentation. They don't just say what the code should do; they prove what it actually does, every single time you run them.

The most important first step is a small one. You don't need to rewrite your entire application with tests. Just pick one small thing.

Seriously. It could be a single new feature on your current project. Or maybe a tiny personal project you knock out over a weekend.

The goal isn't perfection; it's to get a feel for the rhythm. You're building muscle memory in a low pressure environment.

And if you're looking to really accelerate that learning with some hands on guidance, you might be interested in this practical workshop on mastering Test Driven Development in Django using factory_boy and faker.

Now, I'd love to hear from you. What were your biggest "aha!" moments when you first gave TDD a shot? What roadblocks did you hit? Drop your experiences in the comments below.

Frequently Asked Questions About TDD

Even after seeing the whole TDD cycle in action, a few practical questions always seem to pop up. Let's tackle some of the most common ones I hear from teams trying to figure out what test driven development really means for their day to day work.

Does TDD Replace All Other Types of Testing?

That's a great question, and the answer is a firm no. TDD isn't a silver bullet that magically makes all other testing obsolete. It's better to think of it as building a rock solid foundation for your application.

The tests you write during the TDD flow are mostly unit tests. Their job is to check small, isolated pieces of code. They're fantastic for proving that a single function or class does exactly what you expect, but they can't tell you if all those pieces play nicely together. (The Django view test from earlier is already a little broader than a pure unit test, since it exercises the URL, view, template, and test database together.)

You will still absolutely need other layers of testing:

- Integration Tests: These make sure different parts of your system can actually talk to each other. Can your app correctly query the database? Does the payment gateway integration work?

- End to End Tests: These simulate a real user's journey through the entire application. They ensure everything hangs together, from the user clicking a button in the browser all the way to the backend processing the request and back again.

TDD is your first and most fundamental layer of defense, not the only one.
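To make the distinction between layers a bit more tangible, here is a small sketch with one unit test and one integration test, using only the standard library. The format_title and save_article functions and the articles table are hypothetical; an in-memory SQLite database stands in for a real one.

```python
import sqlite3
import unittest


def format_title(title):
    """Pure logic: collapse repeated whitespace and trim the ends."""
    return " ".join(title.split())


def save_article(conn, title):
    """Persist a cleaned title and return the new row id."""
    cursor = conn.execute(
        "INSERT INTO articles (title) VALUES (?)", (format_title(title),)
    )
    conn.commit()
    return cursor.lastrowid


class FormatTitleUnitTest(unittest.TestCase):
    # Unit test: no database, no I/O, just one function in isolation.
    def test_collapses_extra_whitespace(self):
        self.assertEqual(format_title("  Hello   TDD  "), "Hello TDD")


class SaveArticleIntegrationTest(unittest.TestCase):
    # Integration test: the function and a real (in-memory) SQLite
    # database have to work together.
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT)"
        )

    def tearDown(self):
        self.conn.close()

    def test_saved_title_can_be_read_back(self):
        row_id = save_article(self.conn, "  Hello   TDD  ")
        row = self.conn.execute(
            "SELECT title FROM articles WHERE id = ?", (row_id,)
        ).fetchone()
        self.assertEqual(row[0], "Hello TDD")
```

The unit test would still pass if the SQL were wrong, which is exactly why the second kind of test is needed as well.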

Can I Use TDD on an Existing Project Without Tests?

Trying to apply TDD to a mature, legacy codebase can feel like trying to change a tire on a moving car. It’s definitely tricky, but it's not impossible. The secret is to avoid trying to boil the ocean.

Don't start with the heroic goal of writing tests for the entire application. That path leads to burnout. Instead, be strategic. The next time you need to fix a bug or add a small new feature, use that as your entry point.

Before you touch a single line of production code, write a test that either reproduces the bug or defines the new feature's behavior. Watch it fail. Then make your changes and see that test turn green. This pairs well with what Michael Feathers calls "characterization tests", which pin down what the legacy code currently does before you change it. Together they slowly but surely build a safety net around the parts of the system you're actively working on.

Over time, you'll carve out well tested corners of the codebase that you can refactor and improve with real confidence.
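Here is what that entry point might look like for a small bug fix. The average_rating function is a hypothetical piece of legacy code; the comments record which test existed before the fix and which one pins down behavior that was already there.

```python
import unittest


def average_rating(ratings):
    """Legacy helper (hypothetical).

    The original body was `return sum(ratings) / len(ratings)`, which
    crashed with ZeroDivisionError on an empty list.
    """
    if not ratings:  # The fix, added only after the failing test existed.
        return 0.0
    return sum(ratings) / len(ratings)


class AverageRatingTest(unittest.TestCase):
    def test_existing_behavior_is_preserved(self):
        # Pins down what the code already did before we touched it.
        self.assertEqual(average_rating([4, 5, 3]), 4.0)

    def test_empty_list_returns_zero(self):
        # Written first to reproduce the bug: against the original body
        # this raised ZeroDivisionError, so the test started out red.
        self.assertEqual(average_rating([]), 0.0)
```

Returning 0.0 for an empty list is just one possible choice for the fixed behavior; the test is where that decision gets written down.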

Is TDD Suitable for Every Type of Project?

While TDD is incredibly powerful, it's not the perfect tool for every single job. It shines brightest in projects where you have clear, well defined requirements for what the code needs to do.

But there are situations where a "test first" approach might just slow you down. For instance, in the very early stages of exploratory prototyping, your main goal is often to hack something together quickly, try out ideas, and see what sticks. The requirements are fluid, and the code is probably disposable. Writing tests first in this kind of chaotic, creative phase can stifle momentum without adding much real value.

Likewise, for a simple, one off script, the overhead of setting up a test suite might be more trouble than it's worth. The art of good engineering isn't just knowing how to use your tools; it's knowing when to use them.

Are you an early stage startup looking to build a robust, scalable, and maintainable application from the ground up? Kuldeep Pisda specializes in implementing best practices like TDD to give your product the strong technical foundation it needs to succeed. Let’s build something great together.
