Ever poured weeks into a feature that matched the spec sheet perfectly, only to watch it land with a thud? I have. It's a gut-wrenching feeling. You followed the map, but the map led you off a cliff.

This is the silent killer of so many projects: we focus so intensely on building the thing right that we forget to ask if we're even building the right thing. "Testing software requirements" is the formal name for this gut check. It's the process of interrogating your plan before the first line of code is ever written, saving you from the nightmare of building beautiful software that nobody actually wants.

Why We Still Build Software Nobody Wants

My ghost in the machine story? Early in my career, my team built a beautiful, complex reporting dashboard for a client. We were meticulous. Every requirement was ticked off, every bullet point addressed. The problem? The requirements themselves were based on a complete fantasy of how the end users did their jobs. We built the right software for the wrong reality.

Caption: We have all been this person.

That experience taught me a hard lesson: the most expensive bugs are not in the code, they are in the requirements document. They are the ghosts that haunt a project from day one, leading to wasted effort, plummeting team morale, and a serious loss of user trust. Often, this mess starts with a broken process, like using faulty feature prioritization frameworks that point you in the wrong direction from the get-go.

Shifting from "Did We Build It Right?" to "Are We Building the Right Thing?"

This shift in perspective is everything. It's the soul of effective requirements testing. We are not just proofreading a document for typos; we are collectively cross-examining the ideas behind the words.

For many founders, this is a lesson learned the hard way. Getting a handle on these nuances early can make a huge difference, and there are plenty of crucial things to know before starting a startup that can help you sidestep these common traps.

The true cost of vague or incorrect requirements is a tidal wave that swamps more than just your engineering team. Think about the ripple effects:

- Wasted Design and QA Cycles: Your team burns precious time designing, building, and testing something that will ultimately need a major overhaul or be scrapped entirely.

- Damaged Team Morale: Honestly, nothing is more soul-crushing than pouring your heart into work that does not matter. It creates a sense of futility and is a fast track to burnout.

- Eroded Stakeholder Confidence: When projects constantly miss the mark, it kills the trust stakeholders have in the team's ability to deliver real value.

The goal isn't just to validate a document; it's to create a shared understanding. Before you write a single test case, before you architect a solution, you have to test the idea itself.

This guide is about that journey. We are going to move that validation process to the very beginning of the development lifecycle. We will get into practical, actionable ways to ensure that what's written down truly reflects the business need and user expectations, turning ambiguous requests into a rock-solid foundation for your project.

How to Decode and Review Requirements Documents

Before you can test software, you have to become a detective. A requirements document is not a simple checklist; it is a map full of clues, hidden assumptions, and the occasional booby trap. I once lost an entire afternoon debating the word "instantly" with a project manager. To them, it meant "fast." To engineering, it implied a real-time system that would triple the project's complexity.

This is exactly where static testing comes in. It's the process of reviewing the requirements document itself, long before a single line of code exists. It's about finding those "instantly" moments and getting clarity before they unleash chaos. Your goal is to spot ambiguity, hunt for contradictions, and question everything.

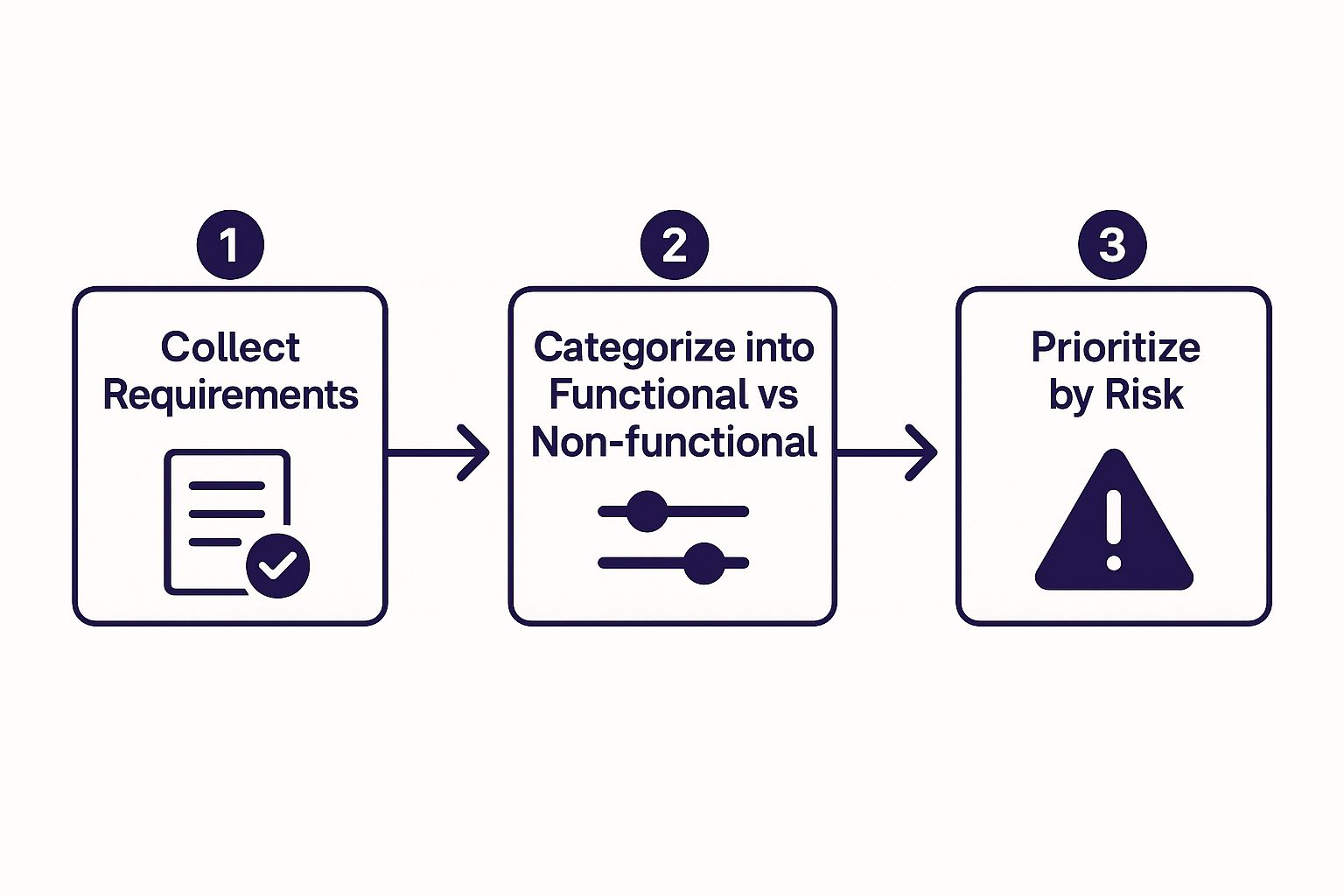

Let's pause and reflect. This infographic breaks down the initial flow, from gathering requirements to figuring out which ones need the most attention first.

This simple process ensures that high-risk items, both functional and non-functional, get the most scrutiny right from the start.

The Power of a Peer Walkthrough

One of the most effective, and surprisingly simple, static techniques is the humble peer walkthrough. This is not a formal, high-pressure presentation. It's a collaborative session where the requirements author guides a small group of peers through the document. Think developers, QA engineers, maybe a designer.

The goal isn't to find blame; it's to build a shared brain. Everyone brings their own unique lens to the problem. A backend developer might immediately spot a database query that's impossible to implement efficiently, while a UI designer points out a user flow that feels clunky or confusing.

A requirement isn't "good" until at least three different roles on the team can read it and describe the same outcome. If their descriptions don't match, the requirement has failed its first test.

This early collaboration is critical for catching issues before they become expensive problems. In a similar vein, when you're dealing with API endpoints, having a crystal-clear contract is vital. For some hands-on advice, check out our guide on how to validate the raw JSON post request body in Django, which dives into ensuring data integrity from the very beginning.

Static review techniques are the first line of defense against building the wrong thing. While they all aim to find defects before coding starts, they each have their sweet spots.

Requirement Review Techniques Compared

| Technique | Best For | Key Advantage | Potential Pitfall |
|---|---|---|---|
| Peer Walkthrough | Building shared understanding and catching cross-functional issues. | Highly collaborative; uncovers different perspectives quickly. | Can get sidetracked without a strong facilitator. |
| Technical Review | Deep diving into complex technical or architectural requirements. | Ensures feasibility and uncovers implementation challenges early. | Might miss the bigger picture or user-facing issues. |
| Formal Inspection | Mission-critical features or regulated industries. | Extremely thorough and process-driven with defined roles. | Can be slow and bureaucratic for smaller projects. |
| Ad hoc Review | Quick feedback on a small, isolated requirement. | Fast and requires minimal coordination. | Lacks structure; quality depends heavily on the reviewer. |

Choosing the right technique depends on the complexity and risk of the requirement at hand. For most teams, a mix of peer walkthroughs and ad hoc reviews covers the bases without slowing things down too much.

Creating Your Ambiguity Checklist

After a while, you start to see patterns in poorly written requirements. Vague adjectives, undefined terms, and passive language are all massive red flags. This is where keeping a checklist helps turn the review process from a vague art into a repeatable science.

Here's a practical checklist you can adapt for your own team:

- Vague Words: Hunt down subjective terms like "fast," "easy to use," "robust," or "user-friendly." Always demand concrete, measurable numbers. "Fast" should become "page loads in under 500ms on a 4G connection."

- Logical Contradictions: Does one requirement directly conflict with another? For example, "User data must be fully encrypted at rest" and "Admins must be able to view user passwords" are completely incompatible.

- Unstated Assumptions: Look for what isn't said. Does a feature assume the user is logged in? Does it rely on a specific user role or permission level that isn't mentioned?

- Completeness Check: Does the requirement cover the unhappy paths? What happens when a user enters invalid data, or what if they lose their internet connection midway through a process?
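The "Vague Words" item is the easiest part of this checklist to automate. Here is a minimal sketch, assuming requirements are available as plain strings. The `VAGUE_TERMS` list, the `find_vague_terms` helper, and the `REQ-A`/`REQ-B` labels are all hypothetical names made up for this example, not part of any standard tool. A script like this only flags wording; the other checklist items still need a human reviewer.

```python
import re

# Hypothetical starter list of red-flag words; extend it with your team's own.
VAGUE_TERMS = ["fast", "instantly", "easy to use", "robust", "user friendly", "intuitive"]


def find_vague_terms(requirement: str) -> list[str]:
    """Return the vague terms found in a requirement, in checklist order."""
    # Lowercase and treat hyphens as spaces so "User-Friendly" matches "user friendly".
    text = requirement.lower().replace("-", " ")
    found = []
    for term in VAGUE_TERMS:
        # Word boundaries keep "fast" from matching inside "breakfast".
        if re.search(rf"\b{re.escape(term)}\b", text):
            found.append(term)
    return found


# Made-up requirements for illustration only.
requirements = {
    "REQ-A": "The dashboard should load fast and be easy to use.",
    "REQ-B": "The page loads in under 500ms on a 4G connection.",
}

for req_id, text in requirements.items():
    flagged = find_vague_terms(text)
    verdict = f"needs measurable numbers for: {', '.join(flagged)}" if flagged else "ok"
    print(f"{req_id}: {verdict}")
```

The first requirement gets flagged for "fast" and "easy to use," while the rewritten, measurable version passes cleanly.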

The rise of DevOps has made this kind of continuous validation more critical than ever. Today, about 75% of technology teams integrate DevOps practices, which means embedding these quality checks at every single stage. This leads to around 40% faster release cycles simply because issues are caught in the document phase, not in production. You can dig into more of these trends in recent software testing market reports.

By adopting this detective mindset early on, you stop bugs before they're even written.

Making Requirements Real with Dynamic Testing

Reading a document is one thing. Seeing an idea in action? That's where the real aha moments happen. This is the whole point of dynamic testing for requirements. It's about moving past static documents and bringing an idea to life long before a single developer gets a ticket.

I once worked on a project where a workflow looked flawless on paper. The sequence was logical, the inputs were clear, and the outputs made sense. Then we built a quick, clickable wireframe, maybe four hours of work, and immediately found a massive flaw. The user had to bounce between three different screens to complete what should have been a single, simple task.

That simple prototype saved us weeks of rework. Finding that issue in the codebase would have been a refactoring nightmare. This is the power you get when you make requirements tangible.

From Whiteboards to Interactive Mockups

Prototyping is not about building a mini version of the final software. It's about creating just enough of an experience to see if a concept actually holds water. This can range from super simple sketches to more polished, interactive models.

- Low-Fidelity Prototypes: Think whiteboard drawings or paper sketches. These are fast, disposable, and perfect for hammering out core user flows without getting bogged down in visual details.

- Wireframes: This is the skeleton of an interface. They focus on layout, information hierarchy, and function, deliberately leaving out color and branding to keep the conversation centered on the workflow.

- Interactive Mockups: Using tools like Figma or Balsamiq, you can create clickable prototypes that closely simulate the final user experience. Stakeholders can actually click through screens and get a real feel for how the application will work.

The whole point of a prototype is to learn, not to build. The cheaper and faster you can learn, the more risk you pull out of the project.

This kind of hands-on validation is a crucial part of a healthy development cycle. In many ways, it echoes the core ideas of Test-Driven Development, where you define the expected behavior before you build the implementation. For a deeper dive, check out our guide on what is Test-Driven Development and how it leads to saner coding.
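To make the "define the expected behavior first" idea concrete, here is a small sketch built around a hypothetical requirement: an account locks after five failed login attempts. The `Account` class and `MAX_FAILED_ATTEMPTS` constant are stand-ins invented for this example, not code from a real project. In a test-first workflow you would write the test function before the class exists and watch it fail.

```python
MAX_FAILED_ATTEMPTS = 5  # the number comes straight from the hypothetical requirement


class Account:
    """Deliberately tiny stand-in for a real user account model."""

    def __init__(self) -> None:
        self.failed_attempts = 0

    @property
    def locked(self) -> bool:
        return self.failed_attempts >= MAX_FAILED_ATTEMPTS

    def record_failed_login(self) -> None:
        # Once locked, further failures do not change anything.
        if not self.locked:
            self.failed_attempts += 1


def test_account_locks_after_five_failed_attempts() -> None:
    account = Account()
    for _ in range(MAX_FAILED_ATTEMPTS - 1):
        account.record_failed_login()
    assert not account.locked  # four failures: still usable
    account.record_failed_login()
    assert account.locked  # fifth failure: locked


test_account_locks_after_five_failed_attempts()
```

Writing the test forces the same questions a prototype does. Does the fifth attempt lock the account, or the sixth? If the requirement can't answer that, it isn't ready for a sprint.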

The Growing Importance of Early Validation

The global software testing market is a clear sign of how seriously companies are taking quality. It is estimated at over USD 54.68 billion for 2025 and is projected to climb toward USD 100 billion by 2035. This growth reflects the need for rock-solid software.

Dynamic testing through prototyping gets right to the heart of this by catching fundamental design and usability flaws when they are cheapest to fix. It's a proactive, not reactive, approach to quality.

To sharpen your process even further, it's worth exploring a comprehensive guide on software testing best practices that can complement your prototyping work. By creating a tangible model, you give everyone something real to react to, ensuring you're building the right thing from day one.

Building a Simple and Effective Traceability Matrix

Let's be honest, the term "traceability matrix" sounds incredibly corporate and a little intimidating. It conjures up images of massive, unreadable spreadsheets that nobody ever actually looks at, let alone updates.

But what if I told you it's really just a simple map? It's a tool for connecting the dots between a business need, the specific requirement that came from it, and the test case that proves you built it right. Nothing more, nothing less.

I once worked on a project where a small, seemingly innocent feature just kept growing. Day after day, new edge cases and "what ifs" were tacked on. It wasn't until we finally built a traceability matrix that we saw the horror: this feature had no original business requirement attached to it. It was a "rogue" feature born from a side conversation, and it was causing some serious scope creep.

That's the real magic of this tool. It forces everyone to answer two fundamental questions: "Why are we building this, and how will we prove it works?"

What a Simple Matrix Needs

Forget the complicated templates you might find online with dozens of columns. A truly useful traceability matrix only needs a few key pieces of information to provide immense value. It's all about creating a clear line of sight from the original request to the final validation.

A barebones structure that gets the job done looks like this:

- Requirement ID: A unique code for each requirement (e.g., REQ 001).

- Requirement Description: A short, clear summary of what needs to be done.

- Business Need/User Story: The "why" behind it all. What problem does this solve?

- Test Case ID: The unique code for the test case that validates this requirement (e.g., TC 001).

- Test Case Status: A simple status like Pass, Fail, or Not Run.

This isn't about creating bureaucracy. It's about building a project's source of truth. When a stakeholder asks if a specific need is covered by the current test plan, the matrix gives you a definitive, immediate answer.

Putting It All Together

Let's imagine we're building a simple login system. Our traceability matrix would start to look something like this:

| Requirement ID | Requirement Description | Business Need | Test Case ID | Test Status |
|---|---|---|---|---|
| REQ 001 | User can log in with a valid email and password. | As a registered user, I need to access my account securely. | TC 001 | Pass |
| REQ 002 | System shows an error for an invalid password. | As a user, I need clear feedback when my login fails. | TC 002 | Pass |
| REQ 003 | User account is locked after 5 failed attempts. | As a platform owner, I need to prevent brute-force attacks. | TC 003 | Not Run |

Suddenly, everything is connected. REQ 003 is clearly linked to a critical security need, and we can instantly see that its corresponding test case hasn't been executed yet.

This simple table transforms testing software requirements from an abstract idea into a concrete, trackable process. It ensures every single piece of work has a clear and documented purpose.
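If your matrix lives in a spreadsheet, the two questions it answers can also be checked with a few lines of code. The sketch below is one possible way to do it, assuming the rows have been loaded into memory. `MatrixRow`, `rogue_requirements`, and `unverified_requirements` are hypothetical names, and the `REQ 004` row is invented here to show what a requirement with no recorded business need looks like.

```python
from dataclasses import dataclass


@dataclass
class MatrixRow:
    req_id: str
    description: str
    business_need: str  # empty string: nobody recorded the "why"
    test_case_id: str   # empty string: no test case linked yet
    status: str         # "Pass", "Fail", or "Not Run"


matrix = [
    MatrixRow("REQ 001", "User can log in with a valid email and password.",
              "As a registered user, I need to access my account securely.",
              "TC 001", "Pass"),
    MatrixRow("REQ 002", "System shows an error for an invalid password.",
              "As a user, I need clear feedback when my login fails.",
              "TC 002", "Pass"),
    MatrixRow("REQ 003", "User account is locked after 5 failed attempts.",
              "As a platform owner, I need to prevent brute force attacks.",
              "TC 003", "Not Run"),
    # Hypothetical row, not in the table above: a feature with no business need.
    MatrixRow("REQ 004", "Login button plays an animation.", "", "", "Not Run"),
]


def rogue_requirements(rows: list[MatrixRow]) -> list[str]:
    """Requirements that cannot answer 'why are we building this?'"""
    return [row.req_id for row in rows if not row.business_need.strip()]


def unverified_requirements(rows: list[MatrixRow]) -> list[str]:
    """Requirements that cannot yet answer 'how will we prove it works?'"""
    return [row.req_id for row in rows if row.status != "Pass"]


print("No business need:", rogue_requirements(matrix))
print("Not yet passing:", unverified_requirements(matrix))
```

With the sample data, the first check surfaces `REQ 004` and the second surfaces `REQ 003` and `REQ 004`. That is the same kind of "rogue feature" discovery described earlier, just automated.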

Lessons Learned from Real-World Projects

Theory is clean, but real projects are almost always messy. The perfectly structured workflows we design often collide with the chaotic reality of human communication, shifting priorities, and simple misunderstandings. My time in the trenches has taught me that the most dangerous bugs aren't in the code; they're in the assumptions we make before writing a single line of it.

One of the costliest lessons I've learned is the danger of not asking "why" enough times. I once led a team that built a beautiful, intricate feature for a stakeholder. We delivered it, and they were thrilled. The problem? Nobody on their team ever used it. We had perfectly executed a request without ever digging into the underlying business problem it was supposed to solve.

We built what they asked for, not what they needed. That distinction is the fragile line between a successful project and a beautiful waste of time.

This experience really drives home the immense value of rigorous quality assurance. The global software testing industry has surged past a market value of $45 billion, with many companies now dedicating up to 40% of their development budgets to testing and QA. This isn't just about finding bugs; it's about validating the entire purpose of the software from the ground up. You can dig into more of these trends in recent global software testing industry statistics.

The Curse of Gold Plating

Another common pitfall I see all the time is "gold plating." This is when a developer adds extra functionality or polish that wasn't requested, believing it will add value. While the intention is often good, it's a direct route to scope creep and missed deadlines.

I remember a junior developer who spent two extra days building a slick animation for a button. It looked great, but it wasn't a requirement, and it introduced a subtle bug on older browsers. Testing software requirements effectively means sticking to the script and verifying only what has been agreed upon. Anything else is a distraction.

Misinterpreting Stakeholder Feedback

Communication breakdowns can derail even the clearest requirements. Stakeholders often speak in terms of outcomes, not technical specs. When they say, "Make it more intuitive," it's our job as analysts and developers to translate that into specific, testable criteria. Does it mean reducing clicks? Adding tooltips? Simplifying the layout?

Here are a few warning signs I've learned to watch for in meetings:

- Vague Feedback: Phrases like "I don't like it" or "it feels clunky" are useless without follow-up questions. Always press for specifics.

- Unstated Assumptions: Everyone in the room might have a different mental model of a feature. Always draw it out on a whiteboard or use a wireframing tool.

- Silence Isn't Agreement: A stakeholder who doesn't speak up in a review meeting isn't necessarily happy. More often than not, they're confused or disengaged. Direct questions are your best friend here.

The biggest lesson is this: requirements testing is fundamentally a human process. It's about curiosity, clarity, and relentless communication to ensure you're building something that truly matters.

Even with a solid plan, a few questions always seem to surface. Let's dig into some of the most common ones I hear from teams who are getting serious about testing their software requirements.

How Do You Handle Changing Requirements In Agile?

This is the big one, isn't it? In an Agile world, change is not a problem to be avoided; it's a reality to be embraced. The trick is to stop thinking about requirements testing as a one-time gate at the beginning of a project. Instead, you need to see it as a continuous loop.

Rather than a massive, formal sign-off that happens once, you validate requirements for each user story right before it enters a sprint. This approach breaks the review process into smaller, faster, much more digestible chunks. This is where a traceability matrix becomes your best friend: it lets you instantly see how a single change ripples through your existing test cases and development work.

The goal in Agile isn't to prevent change. It's to make the cost of that change as low as humanly possible. Continuous validation is how you get there.

What Are The Best Tools For Testing Requirements?

Honestly, this is a bit of a trick question. The best "tool" is rarely a piece of complex software. More often than not, it's a whiteboard, a candid peer review session, or even a simple wireframing app. The conversation is the real tool.

That said, for managing and tracking the requirements themselves, tools like Jira or Confluence are pretty much standard. When it comes to the actual testing part, simpler is almost always better.

- For Prototyping: A tool like Balsamiq is fantastic for whipping up low-fidelity wireframes that get the conversation started. For something that feels more like a real product, Figma is the undisputed industry leader for high-fidelity, interactive mockups.

- For Traceability: Don't overcomplicate this. Seriously. A well-organized Google Sheet or Excel file is often more than enough for most teams to build a simple, effective traceability matrix.

Remember, the tool should serve the conversation, not become the conversation.

How Do I Get Stakeholder Buy-In For Reviews?

It's a classic scenario. Stakeholders sometimes view requirements reviews as a frustrating delay. They're eager to see code being written, not more documents being discussed. The most effective way I've found to get their buy-in is to frame these reviews in terms of risk and money.

Bring up a past project that was delayed or a feature that flopped because of a simple misunderstanding. Point out that a two-hour review session now can easily save two weeks of painful rework later.

Use prototypes to make the requirements real for them. It is infinitely easier for a non-technical stakeholder to spot a problem in a visual mockup than in an abstract requirements document. Show them the future, don't just tell them about it.

Feeling confident about your requirements but need help turning them into a production-grade application? As a full-stack engineering consultant, Kuldeep Pisda helps startups accelerate their roadmaps and build robust, scalable systems. Let's talk about building the right thing, the right way. https://kdpisda.in
